Far-infrared fine structure lines, especially

[CII]  157.7µm and

[OI]

157.7µm and

[OI]  63.2 µm,

have long been used for estimating density and radiation intensity in

photo-dissociation regions (PDR) (e.g.

Hollenbach & Tielens

1997).

ISO has provided for this topic a wealth of data, whose interpretation is

creating controversy and challenging theoretical models.

Malhotra et al. (1997)

showed that while two thirds of normal galaxies have

63.2 µm,

have long been used for estimating density and radiation intensity in

photo-dissociation regions (PDR) (e.g.

Hollenbach & Tielens

1997).

ISO has provided for this topic a wealth of data, whose interpretation is

creating controversy and challenging theoretical models.

Malhotra et al. (1997)

showed that while two thirds of normal galaxies have

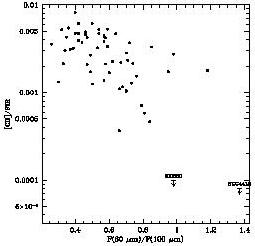

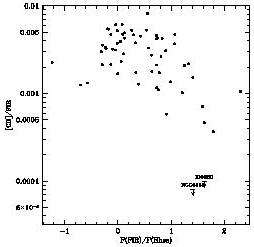

(CII) /

(CII) /

(FIR) in the range 2-7 x

10-3, this ratio

decreases on average as the 60-to-100 or the

(FIR) in the range 2-7 x

10-3, this ratio

decreases on average as the 60-to-100 or the

(FIR) /

(FIR) /

(B) ratios increase, both indicating

more active star formation

(Figure 3). They linked this decrease

to elevated heating intensities, which ionize grains and thereby reduce the

photo-electric yield.

(B) ratios increase, both indicating

more active star formation

(Figure 3). They linked this decrease

to elevated heating intensities, which ionize grains and thereby reduce the

photo-electric yield.

|

|

Figure 3. The CII deficiency in active star forming galaxies from Malhotra et al. (1997). | |
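The ratio discussed above is simple arithmetic, so a small sketch may help make the classification concrete. It computes L(CII)/L(FIR) and compares it with the 2-7 × 10^-3 range quoted for about two thirds of normal galaxies. The function names are hypothetical, and labelling values below that range as "deficient" is an illustrative assumption rather than a cut defined by Malhotra et al. (1997). The luminosities in the usage lines are made-up numbers, not measurements.

```python
# Range of L(CII)/L(FIR) quoted for about two thirds of normal galaxies
# (Malhotra et al. 1997). Using it as a hard classification cut is an
# illustrative assumption, not a published criterion.
NORMAL_RANGE = (2e-3, 7e-3)


def cii_fir_ratio(l_cii: float, l_fir: float) -> float:
    """Return L(CII)/L(FIR); both luminosities must be in the same units."""
    if l_fir <= 0:
        raise ValueError("L(FIR) must be positive")
    return l_cii / l_fir


def classify(ratio: float, normal: tuple = NORMAL_RANGE) -> str:
    """Label a ratio relative to the 'normal galaxy' range (hypothetical helper)."""
    low, high = normal
    if ratio < low:
        return "deficient"
    if ratio > high:
        return "high"
    return "normal"


if __name__ == "__main__":
    # Hypothetical luminosities in solar units, chosen only to illustrate.
    print(classify(cii_fir_ratio(3e7, 1e10)))  # ratio 3e-3 -> "normal"
    print(classify(cii_fir_ratio(1e8, 1e12)))  # ratio 1e-4 -> "deficient"
```

Because only the ratio matters, any consistent luminosity unit (solar luminosities, W, erg/s) gives the same classification.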

The same CII deficiency is also observed in ultra-luminous infrared galaxies (Fischer et al. 1999; Luhman et al. 1998); these authors favor optical depth effects as its origin. This explanation is hard to reconcile, however, with a similar deficiency occurring for [NII] 121.9 µm as well, but not for [OI] 63.2 µm. Detailed discussion can be found in Malhotra et al. 1999, Fischer et al. 1999, or Luhman et al. 1999.