The subtle distortion of shapes of distant galaxies by gravitational lensing is a powerful probe of both the mass distribution and the global geometry of the universe. It has, however, turned out to be one of the most technically difficult of the cosmological probes. This section will cover the range of applications of weak lensing (which we will sometimes abbreviate to WL), the recent and planned weak lensing surveys, and the technical aspects of weak lensing image processing and control of systematics. By covering the latter subjects in some detail (including some methods that we think have been under-appreciated or under-utilized), we hope to stimulate further progress and be helpful to readers who are already experts in weak lensing.

This section is organized as follows: we begin with a qualitative overview of weak lensing and its uses (Section 5.1). We then go into a mathematical treatment of the various statistics that can be used and their dependences on the background cosmology and matter power spectrum (Section 5.2). We then review the observational results from recent weak lensing surveys (Section 5.3). Section 5.4.1 discusses the statistical errors and cosmological sensitivity of cosmic shear surveys at a rule-of-thumb level; we expect this to be a useful entry point for readers interested in understanding survey design. We then turn to more technical aspects of survey design and analysis, including source redshift estimation and the galaxy populations of optical/near-IR and radio surveys (Sections 5.4.2-5.4.4), CMB lensing (Section 5.4.5), the measurement of galaxy shapes (Section 5.5), and astrophysical uncertainties (Section 5.6). We summarize the major systematic errors and mitigation strategies (Section 5.7). We finally consider the advantages of a space mission for weak lensing (Section 5.8) and prospects for the future (Section 5.9).

Some of the material in this section is technical and in a first reading may be either skipped or skimmed; but given that so much of the promise of weak lensing depends on these issues, we felt compelled to include them. The more technical sections have been denoted with an asterisk (*). They may be thought of as analogous to, e.g., the "Track 2" material in Misner et al. (1973).

5.1. General principles: Overview

The images of distant galaxies that we see are distorted by gravitational lensing by foreground structures. In rare cases, such as behind clusters, one observes strong lensing: the deflection of light by massive structures can result in multiple images of the same background galaxy. More often, however, images of galaxies are subjected only to weak lensing: a small distortion of their size and shape, typically of the order of 1%. Since one does not know the intrinsic size or shape of a given galaxy, weak lensing can only be measured statistically by examining the correlations of shapes in deep and wide sky surveys. However, the payoff if these statistical correlations can be measured is enormous: weak lensing provides a direct measure of the distribution of matter, independent of any assumptions about galaxy biasing. Since this distribution can be predicted theoretically, even in the quasilinear regime, and since its amplitude can be directly used to constrain cosmology (unlike for galaxy surveys where one must marginalize over the bias), weak lensing has great potential as a cosmological probe.

In principle, one may attempt to observe either the shearing of galaxies (shape distortion) or their magnification (size distortion). In practice, the shape distortions have been used much more widely, since the mean shape of galaxies is known (they are statistically round: as many galaxies are elongated on the x-axis as on the y-axis) and the scatter in their shapes is less than the scatter in their sizes.

A variety of statistical approaches have been used to extract information from weak lensing shear (see later subsections for references). The simplest is the angular shear correlation function, or its Fourier transform, the shear power spectrum. These are related to integrals over the matter power spectrum along the line of sight, and as such in the linear regime at low redshift they scale as $\propto \Omega_m^2 \sigma_8^2$.<sup>28</sup> Since the angular power spectrum is rather featureless, more information can be extracted via tomography: the measurement of the shear correlation function as a function of the redshifts of the galaxies observed, including the use of cross-correlations between redshift slices. Information on the relation between galaxies and matter can be obtained via galaxy-galaxy lensing, i.e., the correlation of the density field of nearby galaxies with the lensing shear measured on more distant galaxies. In the linear regime, the galaxy-galaxy lensing signal scales as $\propto b\,\Omega_m \sigma_8^2$ and thus provides information on the bias of the lensing galaxies, while in the nonlinear regime it probes individual galaxy halos and hence places constraints on the halo occupation distribution (Section 2.3). Combination of this with the galaxy clustering signal (which scales as $\propto b^2 \sigma_8^2$) enables one to eliminate the bias and measure $\Omega_m \sigma_8$. The scaling of the galaxy-galaxy lensing signal as a function of the source redshift, known as cosmography, depends purely on geometric factors and hence can be used to partially<sup>29</sup> construct a distance-redshift relation. Finally, the low-redshift matter distribution is non-Gaussian, so higher-order statistics such as the bispectrum or 3-point shear correlation function carry additional information.
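The elimination of the bias can be written out explicitly. As a sketch, let $A_{g\gamma}$ and $A_{gg}$ denote the linear-regime amplitudes of the galaxy-galaxy lensing and galaxy clustering signals (these two labels are introduced here only for illustration). The scalings quoted above then give

$$
A_{g\gamma} \propto b\,\Omega_m \sigma_8^2, \qquad
A_{gg} \propto b^2 \sigma_8^2
\quad\Longrightarrow\quad
\frac{A_{g\gamma}^2}{A_{gg}} \propto \Omega_m^2 \sigma_8^2, \qquad
\sqrt{\frac{A_{g\gamma}^2}{A_{gg}}} \propto \Omega_m \sigma_8 ,
$$

so the bias $b$ cancels in this combination.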

For all of the applications of weak lensing to cosmology, deep wide-field imaging is essential. One can see this from a simple order-of-magnitude estimate. For a scatter in galaxy shapes of σγ ~ 0.2, measuring a 1% shear with unit signal-to-noise ratio requires ~ 400 galaxies (0.2 / √400 ≈ 0.01). Measuring the amplitude of density perturbations to 1% accuracy requires that this be done over ~ $10^4$ patches of sky, giving a requirement of order $10^7$ galaxies, which for a density of 15 resolved galaxies per arcmin$^2$ amounts to surveying 200 deg$^2$ of sky. This is the scale of the largest current surveys such as CFHTLS; in practice the errors from these surveys are likely to be closer to several percent due to "factors of a few" that we have dropped here, and due to the inclusion of systematic errors. The eventual goal of the weak lensing community is one or more "Stage IV" surveys (such as LSST on the ground and Euclid and WFIRST in space) that would measure shapes of ~ $10^9$ galaxies and achieve an additional order of magnitude in precision. Such surveys will have to face the daunting task of reducing systematic errors by another order of magnitude.
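The arithmetic of this estimate can be reproduced in a few lines. This is only a sketch: the function names are illustrative, and the "factors of a few" mentioned in the text are ignored just as they are there.

```python
def galaxies_for_unit_snr(shape_noise, shear):
    """Galaxies needed so that shape_noise / sqrt(N) equals the shear."""
    return (shape_noise / shear) ** 2


def survey_area_deg2(n_galaxies, density_per_arcmin2):
    """Sky area in square degrees containing n_galaxies at the given density."""
    area_arcmin2 = n_galaxies / density_per_arcmin2
    return area_arcmin2 / 3600.0  # 3600 arcmin^2 per deg^2


n_per_patch = galaxies_for_unit_snr(0.2, 0.01)  # ~400 galaxies per patch
n_total = n_per_patch * 1.0e4                   # ~4e6, i.e. "of order 1e7"
area = survey_area_deg2(1.0e7, 15.0)            # area for the rounded 1e7 galaxies
print(n_per_patch, n_total, area)
```

With the rounded figure of $10^7$ galaxies the area comes out a little under 200 deg$^2$, matching the number quoted above.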

There are unfortunately many sources of these systematic errors, and most of the effort of the weak lensing community has been devoted to defeating them. One is the measurement of galaxy shapes: while gravitational lensing by a large-scale density perturbation can coherently align the images of many galaxies, such alignment can also arise from shaking of the telescope or optical aberrations. The accurate determination of the point-spread function (PSF) of the telescope (usually based on observations of stars) and removal of its effects is thus critical. This problem gets much worse if one tries to model galaxies with sizes similar to or smaller than the PSF. High-resolution, stable imaging can help with this problem, motivating placement of future instruments at the best ground-based sites or in space. The determination of redshifts for the large number of source galaxies is also a concern. It is not practical to obtain a robust spectroscopic redshift of every galaxy, and hence "photometric redshifts" (estimates of galaxies' redshifts based on their broadband colors) are used. These must be calibrated so that their biases, scatters, and outlier distributions are well known. Finally, there are astrophysical uncertainties: galaxies can suffer "intrinsic alignments" (non-random orientations), and the matter power spectrum may deviate from pure CDM simulations at small scales. Much of our discussion here will be focused on the methodologies that have been developed to suppress systematics at each stage of the observations and analysis.

5.2. Weak lensing principles: Mathematical discussion

We will now go into greater detail on the mathematical aspects of weak lensing, both the construction of the weak lensing field and the various statistics that one can extract from it. The modern theoretical formalism of weak lensing traces back largely to the papers of Blandford et al. (1991), Miralda-Escudé (1991), and Kaiser (1992), though one can find roots in the much earlier papers of Kristian and Sachs (1966) and Gunn (1967).

5.2.1. Deflection of light in cosmology

Gravitational lensing gives a mapping from the intrinsic, unlensed image of the sources of light on the sky — the source plane — to the actual observable sky — the image plane. Our ultimate goal is to extract information about the statistics and redshift dependence of this mapping and use it to constrain cosmological parameters. Our task here is thus two-fold. First, we must derive the mapping function that relates the source to the image plane. However, since we do not know the intrinsic appearance of the sources, we cannot directly infer the lens mapping from observations. Therefore, our second task will be to determine what properties of the lens map can be measured, and with what accuracy.

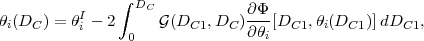

In a fully general context, the lens mapping can be obtained by taking an observer and following the geodesics corresponding to that observer's past light cone. We will make some simplifying approximations here, namely that: (i) the spacetime is described by a Friedmann-Robertson-Walker metric with scalar perturbations and negligible anisotropic stresses (appropriate for nonrelativistic matter, scalar fields, and Λ); (ii) deflection angles are sufficiently small that we may use the flat-sky approximation; (iii) the evolution of perturbations is slow enough that we may neglect time derivatives of the gravitational potential Φ in comparison to spatial derivatives (i.e., nonrelativistic motion); and (iv) such perturbations are small enough that we may compute the lens mapping only to first order in perturbation theory.<sup>30</sup> Within these approximations, we may write the angular coordinates $(\theta_1, \theta_2)$ of a light ray projected back to comoving distance $D_C$ (see eq. 7) in terms of the position $(\theta_1^I, \theta_2^I)$ in the image plane as<sup>31</sup>

|

(57) |

where  is the Green's function,

is the Green's function,

|

(58) |

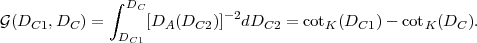

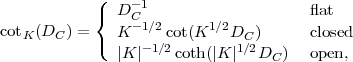

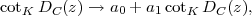

Here cotk(Dc) is the cotangentlike function,

|

(59) |

with the dimensional curvature K defined in equation (8), and Φ is the Newtonian gravitational potential.

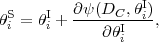
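As a minimal sketch, the cotangent-like function can be coded as below. It assumes the standard closed, flat, and open forms, which reduce to $1/D_C$ in a flat universe as noted later in the text. The helper `lensing_kernel` is a hypothetical name for the difference $\cot_K(D_{C1}) - \cot_K(D_C)$, taken here to be the geometric weight of a lens at $D_{C1}$ for a source at $D_C$.

```python
import math


def cot_k(d_c, curvature=0.0):
    """Cotangent-like function of comoving distance d_c for curvature K."""
    if curvature > 0.0:
        root = math.sqrt(curvature)
        return root / math.tan(root * d_c)
    if curvature < 0.0:
        root = math.sqrt(-curvature)
        return root / math.tanh(root * d_c)
    return 1.0 / d_c  # flat universe


def lensing_kernel(d_source, d_lens, curvature=0.0):
    """Assumed geometric weight cot_K(d_lens) - cot_K(d_source)."""
    return cot_k(d_lens, curvature) - cot_k(d_source, curvature)


print(cot_k(2.0), lensing_kernel(2.0, 1.0), lensing_kernel(2.0, 2.0))
```

The kernel goes to zero as the lens approaches the source, and the curved cases approach the flat one as $K \to 0$.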

The potential derivative in equation (57) is evaluated at the position of the deflected ray $\theta_i(D_{C1})$, so it represents an implicit solution to the light deflection problem. However, in linear perturbation theory (see our assumption iv above), we may evaluate it at the position of the undeflected ray. This is known as the Born approximation. When we do this, it is permissible to pull the angular derivative out of the integral and write

|

(60) |

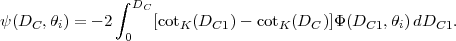

where ψ is the lensing potential:

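$$
\psi(\boldsymbol{\theta}, D_C) = 2\int_0^{D_C}
\big[\cot_K(D_{C1}) - \cot_K(D_C)\big]\,\Phi(D_{C1}, \boldsymbol{\theta})\, dD_{C1}.
\tag{61}
$$

Here it is important to remember that $D_C$ represents the distance to the sources; one integrates over lens distances $D_{C1}$.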

Equation (60) provides the mapping from the observed image plane to the source plane, $\theta_i^S(\theta_i^I)$. In what follows, we will assume that this mapping is one-to-one: this is known as the regime of weak lensing. In the small portion of sky covered by very massive objects, the alternate regime of strong lensing occurs, in which several points in the image plane map to the same point in the source plane. Strong lensing is an important probe of the matter distribution in clusters, but we will not pursue it in this article; we briefly discuss some applications of strong lensing to cosmic acceleration in Section 7.10.

5.2.2. Cosmic shear, magnification, and flexion

We have now accomplished our first task: deriving the lens mapping from the matter distribution. However, we now need a way to classify the observables in the lens mapping. The potential ψ is of course not observable itself: like the Newtonian gravitational potential, its zero-level is arbitrary. Its angular derivative $\partial\psi/\partial\theta_i$ is the deflection angle: the difference between the true position of a source $\theta_i^S$ and its apparent position $\theta_i^I$. However, since sources (in practice, galaxies) can be at any position, we cannot measure the deflection angle either.

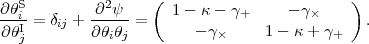

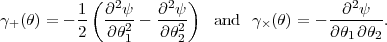

Let us now consider the second derivative of the lensing potential. It is simply the Jacobian of the mapping from image to source plane:

|

(62) |

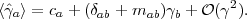

We have separated the 3 independent entries in the symmetric 2 × 2 matrix of partial derivatives into 3 components: the magnification (or convergence) κ and the 2 components of shear, γ+ and γW. The magnification has three effects:

Magnification is a "scalar" in the sense that it is invariant under rotations of the (θ1, θ2) coordinate axes.

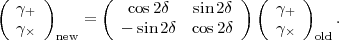

The shear stretches the galaxy along one axis and squeezes on the other: the image of an intrinsically round galaxy appears elongated along the θ1 axis if γ+ > 0 and along the θ2 axis if γ+ < 0. The γ× component stretches and squeezes along the diagonal (45°) axes. The shear is a "spin-2 tensor" in the sense that under a counterclockwise rotation of the coordinate axes by angle δ, it transforms as

$$
\gamma_+' = \gamma_+\cos 2\delta + \gamma_\times\sin 2\delta, \qquad
\gamma_\times' = -\gamma_+\sin 2\delta + \gamma_\times\cos 2\delta.
\tag{63}
$$
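The spin-2 behavior is easy to check numerically. The sketch below assumes the standard rotation law, in which the shear components rotate through twice the angle of the coordinate axes; `rotate_shear` is an illustrative name.

```python
import math


def rotate_shear(gamma_plus, gamma_cross, delta):
    """Shear components after rotating the axes counterclockwise by delta."""
    c = math.cos(2.0 * delta)
    s = math.sin(2.0 * delta)
    return (c * gamma_plus + s * gamma_cross,
            -s * gamma_plus + c * gamma_cross)


half_turn = rotate_shear(0.03, 0.01, math.pi)       # 180 degrees: unchanged
eighth_turn = rotate_shear(0.03, 0.0, math.pi / 4)  # 45 degrees: + becomes cross
print(half_turn, eighth_turn)
```

A rotation by 180° returns the original components, and a rotation by 45° converts a pure γ+ shear into a pure γ× shear of the same amplitude.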

If all galaxies were round, then each galaxy would provide a direct estimate of the shear, since we could find the values of (γ+, γ×) that transformed an initially circular galaxy into the observed image. In reality, galaxies come in many shapes, and any such estimate of the shear components will have some standard deviation σγ known as the shape noise. But in an ensemble average sense galaxies are round: there are as many galaxies in the universe elongated along the θ1 axis as the θ2 axis. Thus, if we take N galaxies in the same region of sky, we may expect that the shear components in that region can be measured with a standard deviation of ~ σγ / √N.<sup>32</sup>
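A toy Monte Carlo illustrates the σγ / √N scaling. It makes a strong simplifying assumption that is not part of the text: each galaxy's measured ellipticity component is modeled as a Gaussian intrinsic term plus the shear. Real shape estimators are considerably more involved.

```python
import math
import random


def estimate_shear(true_shear, shape_noise, n_galaxies, seed=0):
    """Average one ellipticity component over n_galaxies noisy galaxies."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_galaxies):
        # Toy model (assumption): observed = intrinsic Gaussian scatter + shear.
        total += rng.gauss(0.0, shape_noise) + true_shear
    return total / n_galaxies


def expected_error(shape_noise, n_galaxies):
    """Standard deviation of the mean, sigma_gamma / sqrt(N)."""
    return shape_noise / math.sqrt(n_galaxies)


estimate = estimate_shear(0.01, 0.2, 40000)
error = expected_error(0.2, 40000)
print(estimate, error)
```

With 40,000 galaxies and σγ = 0.2, the expected error is 0.001, so a 1% shear is recovered at roughly ten times the noise level.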

Several caveats are in order at this point, and they form the basis for most of the technical problems in weak lensing. One is that a circular galaxy re-mapped by the Jacobian (eq. 62) becomes an ellipse, but since in the real sky one does not observe a population of galaxies with homologous elliptical isophotes, there is no unique procedure to estimate the shear. Moreover, real telescopes, even in space, have finite resolution, and the observed image is convolved with a PSF that smears the galaxy and may introduce spurious elongation on some axis. These two problems together are referred to as the shape measurement problem. A more fundamental issue is that real galaxies are not randomly oriented: they have preferred directions of orientation that are correlated with each other and with large-scale structure, and thus contaminate statistical measures of the cosmic shear field. This is known as the intrinsic alignment problem. Finally, as already mentioned above, relating the lensing potential ψ to the gravitational potential Φ(z), and hence to cosmological parameters, requires accurate knowledge of the source galaxy redshift distribution, presenting the photometric redshift calibration problem. We will discuss all of these problems in Sections 5.4-5.7.

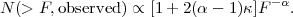

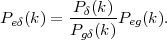

Measuring magnification κ has proven more difficult than measuring shear. One might imagine comparing the size, magnitude, or abundance of galaxies in some region of sky to a typical or "reference" value, but there is a very wide dispersion in galaxy sizes and magnitudes, and since some galaxies are too faint to observe even in deep surveys one cannot measure such a thing as the total number of galaxies. Rather, one can measure the cumulative number of galaxies brighter than some flux threshold, $N(>F)$. If the number counts have a power-law slope α, i.e. $N(>F) \propto F^{-\alpha}$, then magnification will perturb this distribution by a factor

$$
1 + 2(\alpha - 1)\kappa.
\tag{64}
$$

There are two competing effects here: in regions of higher magnification the galaxies appear brighter, which gives the 2ακ factor in equation (64), but there is also the dilution of galaxy number, which is responsible for the "-1" term. Unfortunately, for optical galaxies the observed number count slope is close to the critical value α ≈ 1 for which magnification is not measurable. Moreover, the intrinsic clustering of galaxies gives large fluctuations in the number density that greatly exceed those due to lensing effects. For these reasons, magnification has lagged behind shear as a cosmological probe, and the cosmic magnification signal was not seen until Scranton et al. (2005) measured it using cross-correlation of foreground galaxies and background quasars. Ménard et al. (2010) provide a more detailed analysis, using color information to simultaneously detect lensing magnification and reddening of quasars by dust correlated with intervening galaxies.
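The competition between flux boosting and dilution can be sketched numerically. The code assumes the standard weak-lensing results that the magnification is $\mu = (1-\kappa)^{-2}$ when shear is neglected, and that power-law counts change by $\mu^{\alpha-1}$. The linearized form $1 + 2(\alpha-1)\kappa$ follows for small κ. The function names are illustrative.

```python
def count_ratio_exact(alpha, kappa):
    """Lensed-to-unlensed counts N(>F) for slope alpha, neglecting shear."""
    mu = 1.0 / (1.0 - kappa) ** 2  # magnification for zero shear
    return mu ** (alpha - 1.0)


def count_ratio_linear(alpha, kappa):
    """First-order approximation 1 + 2 (alpha - 1) kappa."""
    return 1.0 + 2.0 * (alpha - 1.0) * kappa


for alpha in (0.5, 1.0, 2.0):
    print(alpha, count_ratio_exact(alpha, 0.01), count_ratio_linear(alpha, 0.01))
```

At α = 1 both expressions equal unity for any κ, which is the critical slope at which the counts carry no magnification signal.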

The most promising route to utilizing the cosmic magnification signal is to use scaling relations that relate the size of a galaxy (as quantified by, e.g., the half-light radius) to parameters that are magnification-independent and can be measured in photometric surveys (Bertin and Lombardi 2006), such as the surface brightness, the Sersic index, or (for AGN) variability amplitude. Huff and Graves (2011) present a first application of this "photometric magnification" method to galaxies, and Bauer et al. (2011) an application to quasars.

After shear and magnification comes the third derivative of the potential, i.e. the variation of shear and convergence across a galaxy. This effect is called the flexion, and it manifests itself via asymmetric banana and triangle-like distortions of an initially circular galaxy (Goldberg and Bacon 2005). Flexion has been measured by several groups (e.g. Leonard et al. 2007, Velander et al. 2011, Leonard et al. 2011), and there is a growing literature on the theory of flexion measurement that parallels the formalism required for shear measurement (e.g. Massey et al. 2007c, Schneider and Er 2008, Rowe et al. 2012). However, because of the extra derivative it is sensitive mainly to structure at the very smallest scales, so it is primarily a tool for cluster lensing rather than cosmological applications on larger scales.

5.2.3. Power spectra and correlation functions*

Just as for any other random field in cosmology, one may construct statistics for the cosmic shear field. The most popular are the power spectrum and its real-space equivalent, the correlation function.

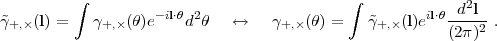

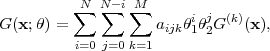

To construct the power spectrum, we take the Fourier transform of the shear field,

|

(65) |

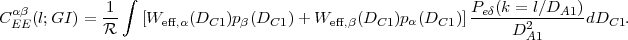

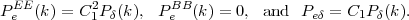

When considering the shear produced by a plane wave perturbation of the lensing potential ψ(θ), it is convenient to rotate the Fourier-space components from the coordinate axis basis to a basis aligned with the direction of the wavevector, which is a preferred direction in the problem. The rotated components are called the E-mode and B-mode:

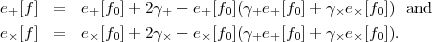

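$$
\tilde\gamma_E(\mathbf{l}) = \cos(2\phi_{\mathbf{l}})\,\tilde\gamma_+(\mathbf{l}) + \sin(2\phi_{\mathbf{l}})\,\tilde\gamma_\times(\mathbf{l}), \qquad
\tilde\gamma_B(\mathbf{l}) = -\sin(2\phi_{\mathbf{l}})\,\tilde\gamma_+(\mathbf{l}) + \cos(2\phi_{\mathbf{l}})\,\tilde\gamma_\times(\mathbf{l}),
\tag{66}
$$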

where $\tan\phi_{\mathbf{l}} = l_2/l_1$, with $l_1$ and $l_2$ being the components of $\mathbf{l}$ in the pre-rotated coordinate system. Thus the E-mode of the shear field corresponds to galaxies that are stretched in the direction of the wave vector and squashed perpendicular to it, whereas the B-mode corresponds to stretching and squashing at 45° angles. One may then define the power spectra:

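$$
\langle \tilde\gamma_E^*(\mathbf{l})\,\tilde\gamma_E(\mathbf{l}')\rangle
= (2\pi)^2\, C^{EE}(l)\, \delta^{(2)}(\mathbf{l} - \mathbf{l}'),
\tag{67}
$$

and similarly for $C^{EB}(l)$ and $C^{BB}(l)$. Rotational symmetry of structure in the universe guarantees that these depend only on the magnitude of $\mathbf{l}$ and not its direction, and reflection symmetry guarantees that $C^{EB}(l) = 0$.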

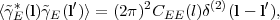

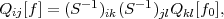

In order to compute these power spectra, we need to express the Fourier modes in terms of those of the lensing potential. From the definition, equation (62), the shear is seen to be the derivative of the deflection angle and hence the second derivative of the lensing potential,

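$$
\gamma_+ = \frac{1}{2}\left(\frac{\partial^2\psi}{\partial\theta_1^2} - \frac{\partial^2\psi}{\partial\theta_2^2}\right), \qquad
\gamma_\times = \frac{\partial^2\psi}{\partial\theta_1\,\partial\theta_2}.
\tag{68}
$$

Using the replacement $\partial/\partial\theta_i \rightarrow i l_i$, we find in Fourier space

$$
\tilde\gamma_+(\mathbf{l}) = -\frac{1}{2}\left(l_1^2 - l_2^2\right)\tilde\psi(\mathbf{l}), \qquad
\tilde\gamma_\times(\mathbf{l}) = -l_1 l_2\,\tilde\psi(\mathbf{l}).
\tag{69}
$$

Substitution into equation (66) implies that

$$
\tilde\gamma_E(\mathbf{l}) = -\frac{1}{2}\,l^2\,\tilde\psi(\mathbf{l}), \qquad
\tilde\gamma_B(\mathbf{l}) = 0.
\tag{70}
$$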

We thus arrive at the remarkable conclusion that cosmic shear possesses only an E-mode; the B-mode shear must vanish, and we have CBB(l) = 0. Confirming this prediction of vanishing B-mode provides a valuable, though not foolproof, test for systematics in WL surveys.
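The vanishing of the B-mode can be verified for a single Fourier mode. This sketch assumes the sign conventions $\gamma_+ = \tfrac{1}{2}(\partial_1^2 - \partial_2^2)\psi$, $\gamma_\times = \partial_1\partial_2\psi$ and the E/B rotation by $2\phi_{\mathbf{l}}$. Other conventions change overall signs but not the conclusion. The function names are illustrative.

```python
import math


def shear_from_potential(l1, l2, psi):
    """Fourier-space shear components sourced by a potential mode psi."""
    g_plus = -0.5 * (l1 * l1 - l2 * l2) * psi
    g_cross = -l1 * l2 * psi
    return g_plus, g_cross


def to_e_b(l1, l2, g_plus, g_cross):
    """Rotate (+, cross) components into E and B modes for wavevector (l1, l2)."""
    phi = math.atan2(l2, l1)
    c = math.cos(2.0 * phi)
    s = math.sin(2.0 * phi)
    return c * g_plus + s * g_cross, -s * g_plus + c * g_cross


g_plus, g_cross = shear_from_potential(3.0, 4.0, 2.0)
e_mode, b_mode = to_e_b(3.0, 4.0, g_plus, g_cross)

# Rotating every galaxy by 45 degrees maps (g+, gx) -> (-gx, g+): pure B-mode.
e_rot, b_rot = to_e_b(3.0, 4.0, -g_cross, g_plus)
print(e_mode, b_mode, e_rot, b_rot)
```

The lensing-induced mode has $\tilde\gamma_E = -(l^2/2)\tilde\psi$ and no B-mode. The 45°-rotated pattern, which cannot come from a scalar potential, is pure B-mode. This is why a B-mode detection flags systematics.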

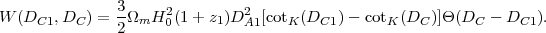

The E-mode shear power spectrum is simply (l2 / 2)2 times the lensing potential power spectrum. The latter may be found from the Limber (small-angle) approximation 33 in terms of the Newtonian potential power spectrum, yielding

|

(71) |

(Here the power spectrum is evaluated at the redshift corresponding to DC1.) We may put this in a more familiar form by recalling Poisson's equation, which tells us that the potential and matter density perturbations are related by

|

(72) |

yielding

|

(73) |

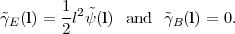

where the lensing window function 34 is 35

|

(74) |

The window function describes the contributions to lensing of sources at Dc from lens structures at distance DC1. Note that it vanishes as the lens approaches the source (DC1 → Dc). In this equation, DA1 is the comoving angular diameter distance (eq. 9) to DC1: in a curved universe DA1 ≠ DC1. Note that in a flat universe, the window function reduces to

|

(75) |

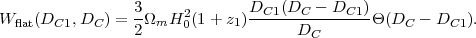
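A short numerical look at the geometry of the flat-universe window function follows. It is a sketch that assumes the standard geometric factor $D_{C1}(1 - D_{C1}/D_C)$ and deliberately drops the prefactor, including the $(1+z_1)$ term, which shifts the peak somewhat. The function names are illustrative.

```python
def flat_window_geometry(d_lens, d_source):
    """Geometric part of the flat-universe lensing window (prefactor omitted)."""
    if d_lens <= 0.0 or d_lens >= d_source:
        return 0.0  # no lensing by structure at or behind the source
    return d_lens * (1.0 - d_lens / d_source)


def peak_lens_distance(d_source, n_grid=1000):
    """Lens distance on a uniform grid that maximizes the geometric window."""
    grid = [d_source * i / n_grid for i in range(n_grid + 1)]
    return max(grid, key=lambda d: flat_window_geometry(d, d_source))


print(peak_lens_distance(2.0), flat_window_geometry(2.0, 2.0))
```

The geometric factor peaks for lenses halfway to the source in comoving distance and vanishes as the lens approaches the source.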

One may also define the angular correlation function of the shear for two galaxies separated by angle ϑ. Since the shear is a tensor, this is more complicated than the correlation function for scalars. Without loss of generality, we may rotate the coordinate system so that the galaxies are separated along the θ1-axis, and then take the + and × components of the shear. We then define the shear correlation functions,

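$$
C_{++}(\vartheta) = \langle \gamma_+(0,0)\,\gamma_+(\vartheta,0)\rangle, \qquad
C_{\times\times}(\vartheta) = \langle \gamma_\times(0,0)\,\gamma_\times(\vartheta,0)\rangle.
\tag{76}
$$

As in the scalar case, these are related to the power spectra:

$$
C_{++}(\vartheta) = \int_0^\infty \frac{l\,dl}{4\pi}
\Big\{\big[C^{EE}(l) + C^{BB}(l)\big] J_0(l\vartheta)
+ \big[C^{EE}(l) - C^{BB}(l)\big] J_4(l\vartheta)\Big\},
\tag{77}
$$

where $J_0$ and $J_4$ are Bessel functions of the first kind. The expression for $C_{\times\times}$ is similar, but with $C^{EE}$ and $C^{BB}$ switched. The correlation function $\{C_{++}(\vartheta), C_{\times\times}(\vartheta)\}$, if measured over all scales, contains exactly the same information as the power spectrum $\{C^{EE}(l), C^{BB}(l)\}$, as one can be derived from the other. Therefore, the choice of which to measure is usually a technical one based on the ease of data processing and handling of covariance matrices. The condition for no B-modes, $C^{BB}(l) = 0$ for all $l$, is more complicated in correlation-function space.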

An infinite number of other second-order statistics (i.e., expectation values containing two powers of shear) can be constructed, such as the aperture-mass variance (Schneider et al. 1998), ring statistics (Schneider and Kilbinger 2007), and finite-interval orthogonal basis decompositions (a.k.a. COSEBIs, Schneider et al. 2010). These alternative statistics were introduced because they have useful properties from the point of view of data processing or systematics control — e.g., for separation of E and B modes, or restriction to a particular range of scales - but all of them are expressible as integrals over the power spectrum or correlation function.

Formulae such as (73) and (77) may be generalized to the full sky, as was first done for CMB polarization (Kamionkowski et al. 1997, Zaldarriaga and Seljak 1997), but for cosmic shear most applications involve small angular scales where the flat-sky approximation suffices.<sup>36</sup>

Having built the formalism to describe the statistics of weak lensing, we can now consider the proposed ways of using it to measure cosmology. Some methods will depend only on the expansion history of the universe, while others are sensitive to the growth of perturbations.

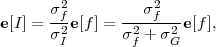

5.2.4. Method I: Cosmic Shear Power Spectrum*

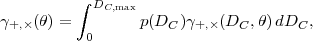

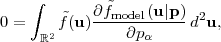

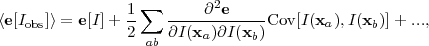

The conceptually simplest approach to using WL is to collect a sample of source galaxies, obtain an estimator for the shear at each galaxy, measure the correlation function or power spectrum, and do a comparison to equation (73). Of course not all galaxies are at the same redshift, but there is a probability distribution of distances p(Dc), and the observed mean shear in a particular region of sky is then

|

(78) |

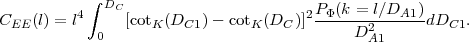

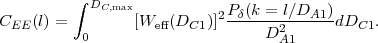

where DC,max is the comoving distance to the farthest galaxy in the slice. The power spectrum of this field can then be written as

|

(79) |

This is similar to equation (73) with W replaced by an effective window function,

|

(80) |

which is simply the usual window function appropriately weighted over the source galaxies.
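The source weighting can be sketched with a discrete set of source distances. The example assumes a flat universe, keeps only the geometric factor $D_{C1}(1 - D_{C1}/D_C)$ of the window (an assumption of this sketch), and replaces the integral over $p(D_C)$ by a weighted sum. All names are illustrative.

```python
def geometric_window(d_source, d_lens):
    """Flat-universe geometric window; zero for lenses behind the source."""
    if d_lens >= d_source:
        return 0.0
    return d_lens * (1.0 - d_lens / d_source)


def effective_window(d_lens, source_distances, source_weights):
    """Window averaged over a discrete, weighted source distribution."""
    total_weight = sum(source_weights)
    weighted = sum(w * geometric_window(d, d_lens)
                   for d, w in zip(source_distances, source_weights))
    return weighted / total_weight


# Two equally weighted source planes at distances 1 and 3, lens at 2:
w_eff = effective_window(2.0, [1.0, 3.0], [1.0, 1.0])
print(w_eff)
```

Only sources behind the lens contribute, so the nearer source plane dilutes the signal without adding to it.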

The cosmic shear power spectrum $C^{EE}(l)$ is sensitive to many cosmological parameters. Being an integral over the matter power spectrum, it is $\propto \sigma_8^2$ in the linear regime, although its behavior in the nonlinear regime is closer to $\propto \sigma_8^3$. It also contains two powers of $\Omega_m$, so we expect that the most important dependences in the problem are that the WL power spectrum scales as ~ $\Omega_m^2 \sigma_8^3$. This is qualitatively correct, but the matter power spectrum and the mapping between $D_A$ and $D_C$ at finite redshift contain sensitivities to all of the cosmological parameters, and so a full answer to the question "what does the shear power spectrum constrain?" requires us to actually do the integral to obtain $C^{EE}(l)$.

The sensitivity to every parameter is both a virtue of the WL power spectrum and its greatest fault: the featureless WL power spectrum contains too many parameter degeneracies. One way to break these degeneracies is to combine WL with other probes, as discussed in Section 8. However, there are also ways of using WL that provide additional information and break these degeneracies internally, as we now discuss.

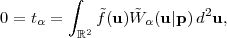

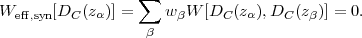

5.2.5. Method II: Power Spectrum Tomography*

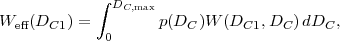

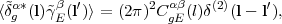

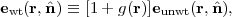

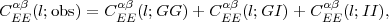

We can improve on the WL power spectrum constraints if we can split the source galaxies into redshift slices. In most practical cases, this would be done with photometric redshifts. In this case, instead of having a single power spectrum, we have N(N + 1) / 2 power spectra and cross-spectra; if we denote the slices by α, β ∈{1,2,...N}, then these spectra are

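$$
C^{EE}_{\alpha\beta}(l) = \int_0^{\infty}
\frac{W_{{\rm eff},\alpha}(D_{C1})\, W_{{\rm eff},\beta}(D_{C1})}{D_{A1}^2}\,
P_\delta\!\left(k = \frac{l}{D_{A1}}\right) dD_{C1},
\tag{81}
$$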

where Weff,α is the effective window function for the α slice. Note that because the window functions are multiplied, this power spectrum depends only on the matter power spectrum at redshifts closer than that of the nearby slice, i.e. at z < min{zα, zβ}. This makes sense because a given lens structure must be in front of both sources to contribute to the shear cross-correlation. Lensing analysis that splits samples by redshift and uses the redshift scalings to constrain cosmology is known as tomography.
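The bookkeeping of tomographic spectra is simple to enumerate. The sketch below lists the unordered slice pairs (α, β) with α ≤ β, of which there are N(N + 1)/2; the function name is illustrative.

```python
from itertools import combinations_with_replacement


def tomographic_pairs(n_slices):
    """All (alpha, beta) slice pairs with alpha <= beta, labeled from 1."""
    return list(combinations_with_replacement(range(1, n_slices + 1), 2))


pairs = tomographic_pairs(5)
print(len(pairs), pairs[:3])
```

For five redshift slices this gives 15 spectra: 5 auto-spectra and 10 cross-spectra.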

Like the shear power spectrum, the tomographic spectra are sensitive to both the background geometry and the growth of structure: the shear power spectrum at $l$ depends on the $D_C(z)$ relation, on $P_\delta(k = l/D_A; z)$ as a function of redshift, and on the curvature $K$.<sup>37</sup> With a single power spectrum $C^{EE}(l)$ there is no hope of disentangling these functions with WL alone. One might hope that having the tomographic cross-spectra as a function of $z_\alpha$ and $z_\beta$ would allow the relevant degeneracies to be broken. Unfortunately, such a program runs into three problems:

Despite these drawbacks, tomographic power spectra have far fewer parameter degeneracies than the shear power spectrum alone. More importantly, having N(N + 1) / 2 power spectra provides many additional opportunities for internal consistency tests and rejection of systematic errors.

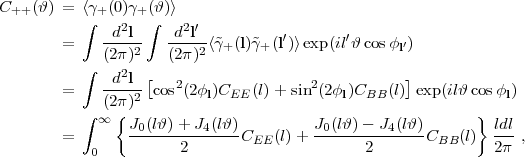

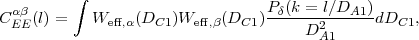

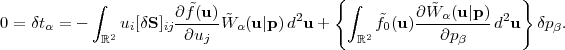

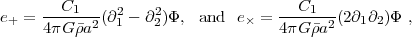

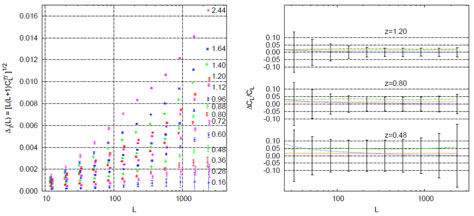

Some examples of theoretical tomographic power spectra are shown in Fig. 16.

Figure 16. The E-mode shear power spectra predicted for the WMAP 7-year best fit cosmology ($\Omega_m = 0.265$, $\sigma_8 = 0.8$, $H_0 = 71.9$ km s$^{-1}$ Mpc$^{-1}$). The curves show power spectra for sources at z = 0.5 (bottom), 1.0, and 2.0 (top). The diagonal line shows the shot noise contribution at a source density of $n_{\rm eff} = 10$ galaxies per arcmin$^2$; for this power spectrum measurement the shot noise scales as $n_{\rm eff}^{-1}$. At small scales, where the noise power spectrum exceeds the signal, it is not possible to measure individual structures in the weak lensing map. However, with sufficient sky coverage, high-S/N measurement of the power spectrum or correlation function is still possible (see Section 5.4.1, particularly eq. 96).
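The level of the shot noise line can be estimated as follows. This is a sketch under two assumptions that the caption does not state: the standard white-noise form $\sigma_\gamma^2/\bar n$ with $\bar n$ in galaxies per steradian, and a per-component shape noise σγ = 0.2 borrowed from the order-of-magnitude estimate in Section 5.1.

```python
import math

# Number of square arcminutes in one steradian.
ARCMIN2_PER_STERADIAN = (180.0 * 60.0 / math.pi) ** 2


def shot_noise_power(n_eff_per_arcmin2, sigma_gamma=0.2):
    """White shape-noise power sigma_gamma^2 / n, with n per steradian."""
    n_per_steradian = n_eff_per_arcmin2 * ARCMIN2_PER_STERADIAN
    return sigma_gamma ** 2 / n_per_steradian


noise_10 = shot_noise_power(10.0)
noise_20 = shot_noise_power(20.0)
print(noise_10, noise_20)
```

Doubling the source density halves the noise power, which is the $n_{\rm eff}^{-1}$ scaling noted in the caption.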

5.2.6. Method III: Galaxy-galaxy Lensing*

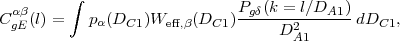

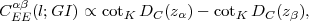

A third way to use weak lensing is to look not just at the shear power spectrum but at its correlation with the distribution of foreground galaxies. This subject is known as galaxy-galaxy lensing (GGL), and it is a powerful probe of the relation between dark matter and galaxies. The angular cross-power spectrum between the galaxies in one redshift slice α (the "foreground" or "lens" slice) and the E-mode shear in a more distant slice β (the "background" or "source" slice) is defined by

|

(82) |

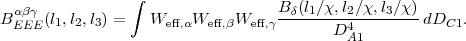

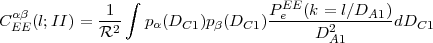

where δgα is the 2-dimensional projected galaxy overdensity and δgα is its Fourier transform, and α and β represent redshift slices. It can be computed via Limber's equation as

|

(83) |

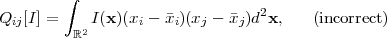

where Pg δ(k) is the 3-dimensional galaxy-matter cross-spectrum. The real-space correlation function of galaxy density and shear is

|

(84) |

In the case where the foreground galaxy slice (α) is narrow - either due to use of spectroscopic foregrounds or high-quality photo-zs - the probability distribution in Limber's equation (eq. 83) becomes a δ-function, and the galaxy-matter cross-spectrum can be obtained.

One can also measure GGL by computing the mean tangential shear (i.e., shear in the direction orthogonal to the lens-source vector) of background galaxies around foreground galaxies as a function of radius. This view of the measurement is taken in many papers, but it is (almost) mathematically equivalent to correlating the shear field of the background galaxies with the density field of the foreground galaxies.
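Converting measured shear components into tangential shear about a lens is a one-line rotation. The sketch below assumes the common convention in which tangential alignment gives positive $\gamma_t$, with φ the position angle of the source relative to the lens. The function name and argument order are illustrative.

```python
import math


def tangential_and_cross(g_plus, g_cross, dx, dy):
    """Tangential and cross shear of a source offset by (dx, dy) from a lens."""
    phi = math.atan2(dy, dx)  # position angle of the lens-source vector
    c = math.cos(2.0 * phi)
    s = math.sin(2.0 * phi)
    g_t = -(g_plus * c + g_cross * s)
    g_x = g_plus * s - g_cross * c
    return g_t, g_x


# A source to the east of the lens, elongated north-south (g_plus < 0):
print(tangential_and_cross(-0.02, 0.0, 1.0, 0.0))
# A source to the north of the lens, elongated east-west (g_plus > 0):
print(tangential_and_cross(0.02, 0.0, 0.0, 1.0))
```

Both example sources are elongated perpendicular to the lens-source vector, so both yield the same positive tangential shear and no cross component. Averaging $\gamma_t$ in radial bins around many lenses gives the usual GGL profile.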

From the perspective of dark energy studies, the principal advantage of GGL over the shear power spectrum is observational: the shear is being correlated with galaxies rather than itself. A spurious source of shear, e.g. from imperfections in the PSF model, is a source of systematic error in the shear power spectrum, but in GGL it is only a source of noise because it is equally likely to arise in regions of high and low foreground galaxy density. The principal disadvantage of GGL is that its interpretation requires assumptions about the galaxies, which must ultimately be justified empirically.

Galaxy-galaxy lensing can be used in the linear, the weakly nonlinear, and the fully nonlinear regimes:

Yoo and Seljak (2012) provide an extensive discussion of the cosmological constraints that can be derived from the combination of GGL and galaxy clustering, on small and large scales, in the simplified case where one isolates the population of central galaxies, so that there is one galaxy per dark matter halo.

Cluster-galaxy lensing is similar to GGL, but one takes clusters of galaxies rather than individual galaxies as the reference points (Mandelbaum et al. 2006, Sheldon et al. 2009). We will discuss this idea further in Section 6, arguing that it offers the most reliable route to calibrating cluster mass-observable relations and has the potential to sharpen cosmological parameter constraints significantly. Cluster-galaxy lensing may also be a useful tool for calibrating uncertainties in shear calibration and photometric redshifts, since the shear signal in the cluster regime is stronger and the cluster photometric redshifts themselves are usually well determined.

5.2.7. Method IV: Cosmography*

The previous sections motivate us to ask whether there is a way to combine the observational advantages of GGL with the model independence of the shear power spectrum. There is, although there is a large price to pay: one can only obtain geometrical information.

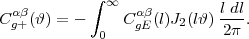

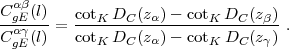

The idea is to consider narrow slices of galaxies centered at redshifts zα < zβ < zγ and measure the lensing of galaxies in slices zβ and zγ by galaxies in the foreground slice zα. The ratio of the galaxy-shear cross-spectra is, using equation (83),

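$$\frac{C^{g_\alpha E_\beta}(l)}{C^{g_\alpha E_\gamma}(l)} = \frac{\cot_K D_c(z_\alpha) - \cot_K D_c(z_\beta)}{\cot_K D_c(z_\alpha) - \cot_K D_c(z_\gamma)}. \qquad (85)$$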

One can see that all dependence on the power spectra and the distribution of galaxies has been cancelled, allowing a purely geometric test of cosmology. This is called the cosmography or shear-ratio test (Jain and Taylor 2003, Bernstein and Jain 2004).

One can see from equation (85) that cosmography can determine the $\cot_K D_c(z)$ relation only up to an affine transformation, i.e., a transformation of the form

$$\cot_K D_c(z) \rightarrow a_0 + a_1 \cot_K D_c(z), \qquad (86)$$

which leaves the ratios of differences of $\cot_K D_c(z)$ unaffected. (Recall that $\cot_K D_c = 1/D_c$ in a flat universe.) It is clear that $a_1$ is the familiar overall rescaling degeneracy: cosmography measures only dimensionless ratios and cannot distinguish two models with different H0 but the same values of Ωm, w, etc. Precisely the same degeneracy afflicts the supernova DL(z) relation because the absolute magnitude of a Type Ia supernova is not known a priori. The $a_0$ degeneracy is trickier, arising from the fact that ∞ is not a special distance in lensing problems. 38 Finally, since only $\cot_K D_c(z)$ is measured, cosmography cannot by itself provide a model-independent measurement of the curvature of the universe. But aside from these three degeneracies ($a_1$, $a_0$, and $K$), the entire geometry of the universe over the range of redshifts observed is measurable.

Unfortunately, the aforementioned degeneracies are similar in functional form to the effects of Ωm and w, and they have severely limited the application of cosmography thus far. This is particularly true for observations restricted to low redshift: if one Taylor expands the distance as $\tan_K D_c(z) = c_1 z + c_2 z^2 + c_3 z^3 + \ldots$, then any cosmological model is degenerate with one that has $(c_1, c_2) = (1, 0)$, and hence one must go through at least the $z^3$ term before cosmography provides any useful information. For example, at (zα, zβ, zγ) = (0.25, 0.35, 0.70), the difference in the shear ratio (eq. 85) between an Ωm = 0.3 flat ΛCDM cosmology and a pure CDM Ωm = 1 cosmology is only 1%! In early work (Mandelbaum et al. 2005) cosmography was therefore used as a test for shear systematics rather than a cosmological probe.
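The size of this effect can be checked numerically. The following is a minimal sketch, assuming a flat universe (so that $\cot_K D_c = 1/D_c$), matter plus a cosmological constant only, and distances in units of $c/H_0$; the function names are illustrative and not taken from any lensing package. It evaluates the ratio of differences of $1/D_c$ for the two cosmologies quoted above and confirms that the ratio is unchanged by an affine transformation with arbitrary $a_0$ and $a_1$.

```python
import math

def comoving_distance(z, omega_m, n_steps=2000):
    """Comoving distance in units of c/H0 for a flat universe with
    matter + cosmological constant, via Simpson's rule (n_steps even)."""
    omega_l = 1.0 - omega_m

    def inv_e(zz):
        return 1.0 / math.sqrt(omega_m * (1.0 + zz) ** 3 + omega_l)

    h = z / n_steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, n_steps):
        total += (4.0 if i % 2 else 2.0) * inv_e(i * h)
    return total * h / 3.0

def shear_ratio(f_alpha, f_beta, f_gamma):
    """Ratio of differences of f = cot_K D_c at the three slice redshifts."""
    return (f_alpha - f_beta) / (f_alpha - f_gamma)

def flat_shear_ratio(z_alpha, z_beta, z_gamma, omega_m):
    """Shear ratio in a flat universe, where cot_K D_c = 1 / D_c."""
    f = [1.0 / comoving_distance(z, omega_m) for z in (z_alpha, z_beta, z_gamma)]
    return shear_ratio(*f)

# The example redshifts quoted in the text.
r_lcdm = flat_shear_ratio(0.25, 0.35, 0.70, omega_m=0.3)
r_cdm = flat_shear_ratio(0.25, 0.35, 0.70, omega_m=1.0)
frac_diff = r_lcdm / r_cdm - 1.0  # expected to be at the percent level

# Affine invariance: f -> a0 + a1 * f leaves the ratio unchanged.
a0, a1 = 2.0, 3.0  # arbitrary illustrative values
r_plain = shear_ratio(5.0, 4.0, 1.5)
r_affine = shear_ratio(a0 + a1 * 5.0, a0 + a1 * 4.0, a0 + a1 * 1.5)
```

Because the ratio depends only on differences of $1/D_c$, both the overall distance scale and any additive offset drop out, which is why low-redshift measurements carry so little cosmological information.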

The outlook for cosmography is much brighter as we probe to larger redshifts, or if we consider dark energy models with complicated redshift dependences that cannot be mimicked by the degeneracy of equation (86). A particularly promising possibility is to use cosmography with lensing of the anisotropies in the CMB (z = 1100) to obtain a much longer lever arm (Acquaviva et al. 2008). In principle one can also apply the cosmography method to strong gravitational lenses (see Section 7.10 below). Here the challenge is that different sources probe different locations in the lens, so one must be able to constrain the lens potential extremely well to extract useful cosmographic constraints.

5.2.8. Method V: Non-Gaussian Statistics*

The primordial density fluctuations in the universe were very nearly Gaussian, as evidenced by the CMB. In this case, the fluctuations are fully described by the power spectrum, and this has become the common language of CMB observations. However, nonlinear evolution makes the matter fluctuations and hence the lensing shear in the low-redshift universe highly non-Gaussian on small and intermediate scales. Therefore, many other statistical measures of the shear field have been proposed, the most popular of which is the bispectrum.

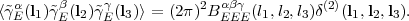

The bispectrum is obtained by taking the product of three Fourier modes:

|

(87) |

Statistical homogeneity forces the three wave vectors involved to sum to zero so the bispectrum is actually a function of the triangle configuration; rotational and reflection symmetry then tell us that it depends only on the side lengths (l1, l2, l3) 39, which must satisfy the triangle inequality. Because there are 2 shear modes (E and B), there are actually 4 types of bispectrum: EEE, EEB, EBB, and BBB, but only EEE can be produced cosmologically. Limber's equation expresses it in terms of the 3-dimensional matter bispectrum,

|

(88) |

The bispectrum contains information equivalent to the shear 3-point correlation function. The theory of transformations between the two and the implied symmetry properties have been extensively studied (Zaldarriaga and Scoccimarro 2003, Schneider and Lombardi 2003, Takada and Jain 2003, Schneider et al. 2005). Halo model based descriptions of the 3-point function are also available (e.g. Cooray and Hu 2001).

The original motivation to study the WL shear bispectrum was to break the degeneracy between Ωm and σ8 (e.g. Bernardeau et al. 1997, Hui 1999, Takada and Jain 2004). At low redshift, and on large scales where perturbation theory applies, the WL power spectrum is proportional to $\Omega_m^2\sigma_8^2$, whereas the bispectrum is proportional to $\Omega_m^3\sigma_8^4$; it contains three powers of the shear and hence three powers of Ωm, but the matter bispectrum is generated by nonlinear interactions and is proportional to the square of the matter power spectrum, i.e., to $\sigma_8^4$ rather than $\sigma_8^3$. Unfortunately, this route to degeneracy breaking has proven difficult because of the low signal-to-noise ratio and high sampling variance of the bispectrum and because the degeneracy directions of the power spectrum and bispectrum are almost parallel in the (Ωm, σ8) plane. A more interesting application of the WL bispectrum in future surveys may be as a constraint on modified gravity theories, though this has not yet been well studied.
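As a rough illustration of why the two degeneracy directions are nearly parallel, one can take the quoted low-redshift, perturbative scalings at face value (a sketch only; it ignores the scale and redshift dependence of the exponents). Holding each observable fixed gives

$$C^{EE} \propto \Omega_m^2\,\sigma_8^2 = {\rm const} \;\Rightarrow\; \sigma_8 \propto \Omega_m^{-1}, \qquad B^{EEE} \propto \Omega_m^3\,\sigma_8^4 = {\rm const} \;\Rightarrow\; \sigma_8 \propto \Omega_m^{-3/4},$$

so the two curves in the (Ωm, σ8) plane have logarithmic slopes of $-1$ and $-3/4$. Equivalently, the combination

$$\frac{B^{EEE}}{\left(C^{EE}\right)^2} \propto \Omega_m^{-1}$$

is independent of σ8 in this approximation, which is the sense in which the bispectrum can in principle isolate Ωm. The symbols $C^{EE}$ and $B^{EEE}$ here simply denote the amplitudes of the E-mode power spectrum and bispectrum.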

5.3. The Current State of Play

Weak lensing as a cosmological probe is only a decade old, although the ideas go back much further. Zwicky (1937) famously suggested gravitational lensing as a tool to determine cluster masses (although the discussion focused on strong lensing). We separately consider here the more recent history of cosmic shear studies, and of galaxy-galaxy lensing as a cosmological probe. Also the techniques and applications associated with lensing outside the optical bandpasses are sufficiently different that we place them in a separate section. Lensing by clusters is considered in the cluster section (Section 6).

Kristian (1967) described an initial attempt to measure statistical cosmic shear using photographic plates taken on the Palomar 5 m telescope. He even correctly identified intrinsic alignments as a systematic error, and noted that the distance dependence could be used to separate them from true cosmic shear. Interestingly, the objective of this analysis was to search for cosmological-scale gravitational waves or other large-scale anisotropies (Kristian and Sachs 1966). The author set a limit on the magnetic part of the Weyl tensor 40 of ≲ 200 $H_0^{-2}$, which he describes as "about the best that can be done with this kind of measurement." Fortunately this has not remained the case; indeed, it was improved upon by two orders of magnitude by Valdes et al. (1983).

The modern era of lensing studies was introduced by the availability of arrays of large-format CCDs. Mould et al. (1994) searched for cosmic shear and reached percent-level sensitivity, but did not detect a signal. Cosmic shear was finally detected in 2000 by several groups (Wittman et al. 2000, Bacon et al. 2000, Van Waerbeke et al. 2000), and in deeper but narrower data from HST (Rhodes et al. 2001, Refregier et al. 2002). Over the same period, several additional square degrees were observed with long exposure times in excellent seeing using ground-based telescopes (Van Waerbeke et al. 2001, Van Waerbeke et al. 2002, Bacon et al. 2003, Hamana et al. 2003). The first wide-shallow surveys were also carried out from the ground: the 53 deg$^2$ Red-Sequence Cluster Survey (Hoekstra et al. 2002) and the 75 deg$^2$ CTIO survey (Jarvis et al. 2003). These studies established the existence of cosmic shear, but at a level far below that which would be expected in Ωm ~ 1 models normalized to the CMB. The large error bars in early studies meant that only a single amplitude could be measured, yielding a constraint on the combination $\sigma_8(\Omega_m/0.3)^{\nu}$, where the exponent ν varied between 0.3 and 0.7 depending on the scale and depth. In the first detection of the cosmic shear bispectrum, achieved with the VIRMOS-DESCART survey, Pen et al. (2003) measured the skewness of the filtered shear signal and used it in combination with the power spectrum to rule out large-Ωm, low-σ8 solutions, finding Ωm < 0.5 at 90% confidence. The deep COMBO-17 survey first detected the evolution of σ8 as a function of cosmic time (Bacon et al. 2005).

However, the early studies of cosmic shear were not free of trouble. As one can see from Table 3, while most were broadly in agreement with σ8 in the 0.7-0.9 range, a detailed comparison shows that the measurements were not all consistent. This discrepancy stimulated discussions about a number of possible ancillary issues with the data, such as the role of intrinsic alignments, whether the source redshift distribution N(z) was properly calibrated, and whether the models for the nonlinear power spectrum and assumptions about the P(k) shape parameter Γ could be leading to discrepancies. More seriously, most of the early measurements contained B-mode signals at levels not far below the E-mode. This was a clear signal of contamination of non-cosmological origin, probably PSF correction residuals. Also, intrinsic alignments of galaxies were detected at high significance even in the linear regime, at a level that represented a potentially serious systematic error even for then-ongoing surveys (Mandelbaum et al. 2006).

It was clear by 2006 that weak lensing was a very hard observational problem and that a great deal of work lay ahead to turn it into a precision cosmological probe. This resulted in a reduction in the rate of new cosmic shear results, the reorganization of the field into larger teams, and detailed looks at systematic errors ranging from optical distortions in telescopes to intrinsic galaxy alignments. Several wide-field optical surveys were ongoing at the time, including the deep 170 deg$^2$ CFHT Legacy Survey (for which cosmic shear was a key science driver) and the very deep multiwavelength COSMOS survey with high-resolution optical imaging from HST/ACS (Massey et al. 2007b, Schrabback et al. 2010). The CFHTLS presented some early results (Hoekstra et al. 2006, Semboloni et al. 2006a, Fu et al. 2008), but following this there was a rather bleak period. No new ground-based wide-field cosmic shear results were published, and no new large surveys were undertaken with HST, nor do future large HST weak lensing surveys seem likely. 41

In the past five years, however, great progress has been made in overcoming the difficulties that at first appeared so daunting. The community made a massive investment in algorithms to determine and correct for PSF ellipticities (we will review some of these in Section 5.5), and in investigating the physics that determines the PSF, including such complications as atmospheric turbulence (Heymans et al. 2012a). Equally important, these methods were tested in public challenges on simulated data (STEP1, Heymans et al. 2006; STEP2, Massey et al. 2007a; GREAT08, Bridle et al. 2010; GREAT10, Kitching et al. 2010; see further discussion in Section 5.5). Progress was also made on astrophysical systematic errors. We learned that large-scale intrinsic galaxy alignments are strongest for luminous red galaxies (Hirata et al. 2007, Mandelbaum et al. 2011), and that the linear alignment model, once considered a crude analytical tool (Catelan et al. 2001), is in fact an excellent description of the observations of early-type galaxies at ≥ 10 $h^{-1}$ Mpc scales (Blazek et al. 2011).

As a result of this great effort by the community, the Stage II weak lensing results are finally coming to fruition and yielding large data sets that pass the standard systematics tests (e.g., B-modes consistent with zero). Two groups (Lin et al. 2012, Huff et al. 2011) have performed a cosmic shear measurement using the Sloan Digital Sky Survey deep co-added region, a 120-degree-long stripe observed many times over the course of three years as part of the SDSS-II supernova survey. These analyses used different methods to co-add their data and correct for the PSF ellipticity, and they imposed different selection cuts and hence had different redshift distributions, yet the results were in agreement (and slightly more than 1σ below the WMAP prediction for σ8). The largest of the Stage II weak lensing programs was the CFHT Legacy Survey. After a thorough analysis, the lensing results and cosmological implications were recently published (Heymans et al. 2012b, Benjamin et al. 2012, Erben et al. 2012, Kilbinger et al. 2012, Miller et al. 2013). They appear consistent with the standard ΛCDM cosmology with WMAP-derived initial conditions, with the amplitude σ8 measured to ± 0.03.

A summary of the current status of optical cosmic shear results is shown in Table 3.

| Reference | Telescope/instrument | Area (deg$^2$) | Number of galaxies | Result |
|---|---|---|---|---|
| Bacon et al. (2000) | WHT/EEV-CCD | 0.5 | 27k | $\sigma_8 = 1.5 \pm 0.5$ (@ $\Omega_m = 0.3$) |
| Van Waerbeke et al. (2000) | CFHT/UH8K+CFH12K | 1.75 | 150k | Detection$^{a}$ |
| Wittman et al. (2000) | Blanco/BTC | 1.5 | 145k | Detection$^{b}$ |
| Rhodes et al. (2001) | HST/WFPC2 | 0.05 | 4k | $\sigma_8(\Omega_m/0.3)^{0.48} = 0.91^{+0.25}_{-0.30}$ |
| Van Waerbeke et al. (2001) | CFHT/CFH12K | 6.5 | 400k | $\sigma_8(\Omega_m/0.3)^{0.6} = 0.99^{+0.08}_{-0.10}$ (95%CL)$^{c}$ |
| Hoekstra et al. (2002) | CFHT/CFH12K + Blanco/Mosaic II | 53 | 1.78M | $\sigma_8(\Omega_m/0.3)^{0.55} = 0.87^{+0.17}_{-0.23}$ (95%CL) |
| Refregier et al. (2002) | HST/WFPC2 | 0.36 | 31k | $\sigma_8 = 0.94 \pm 0.14$ (@ $\Omega_m = 0.3$, $\Gamma = 0.21$) |
| Bacon et al. (2003) | Keck II/ESI + WHT | 1.6 | | $\sigma_8(\Omega_m/0.3)^{0.68} = 0.97 \pm 0.13$ |
| Brown et al. (2003) | MPG ESO 2.2m/WFI | 1.25 | | $\sigma_8(\Omega_m/0.3)^{0.49} = 0.72 \pm 0.09$ $^{d,e}$ |
| Jarvis et al. (2003) | Blanco/BTC+Mosaic II | 75 | 2M | $\sigma_8(\Omega_m/0.3)^{0.57} = 0.71^{+0.12}_{-0.16}$ (2σ) |
| Hamana et al. (2003) | Subaru/SuprimeCam | 2.1 | 250k | $\sigma_8(\Omega_m/0.3)^{0.37} = 0.78^{+0.55}_{-0.25}$ (95%CL) |
| Rhodes et al. (2004) | HST/STIS | 0.25 | 26k | $\sigma_8(\Omega_m/0.3)^{0.46}(\Gamma/0.21)^{0.18} = 1.02 \pm 0.16$ |
| Heymans et al. (2005) | HST/ACS | 0.22 | 50k | $\sigma_8(\Omega_m/0.3)^{0.65} = 0.68 \pm 0.13$ |
| Massey et al. (2005) | WHT/PFIC | 4 | 200k | $\sigma_8(\Omega_m/0.3)^{0.5} = 1.02 \pm 0.15$ |
| Hoekstra et al. (2006) | CFHT/MegaCam | 22 | 1.6M | $\sigma_8 = 0.85 \pm 0.06$ @ $\Omega_m = 0.3$ |
| Semboloni et al. (2006a) | CFHT/MegaCam | 3 | 150k | $\sigma_8 = 0.89 \pm 0.06$ @ $\Omega_m = 0.3$ |
| Benjamin et al. (2007) | Various$^{g}$ | 100 | 4.5M | $\sigma_8(\Omega_m/0.3)^{0.59} = 0.74 \pm 0.04$ |
| Hetterscheidt et al. (2007) | MPG ESO 2.2m/WFI | 15 | 700k | $\sigma_8 = 0.80 \pm 0.10$ @ $\Omega_m = 0.3$ |
| Massey et al. (2007b) | HST/ACS | 1.64 | 200k | $\sigma_8(\Omega_m/0.3)^{0.44} = 0.866^{+0.085}_{-0.068}$ |
| Schrabback et al. (2007) | HST/ACS | 0.4 | 100k | $\sigma_8 = 0.52^{+0.11}_{-0.15}$(stat) $\pm$ 0.07(sys) @ $\Omega_m = 0.3$ $^{f}$ |
| Fu et al. (2008) | CFHT/MegaCam | 57 | 1.7M | $\sigma_8(\Omega_m/0.3)^{0.64} = 0.70 \pm 0.04$ |
| Schrabback et al. (2010) | HST/ACS | 1.64 | 195k | $\sigma_8(\Omega_m/0.3)^{0.51} = 0.75 \pm 0.08$ |
| Huff et al. (2011) | SDSS | 168 | 1.3M | $\sigma_8 = 0.636^{+0.109}_{-0.154}$ @ $\Omega_m = 0.265$ $^{h}$ |
| Lin et al. (2012) | SDSS | 275 | 4.5M | $\sigma_8(\Omega_m/0.3)^{0.7} = 0.64^{+0.08}_{-0.12}$ $^{h}$ |
| Jee et al. (2013) | Mayall+CTIO/Mosaic | 20 | 1M | $\sigma_8 = 0.833 \pm 0.034$ $^{i}$ |
| Kilbinger et al. (2012) | CFHT/MegaCam | 154 | 4.2M | $\sigma_8(\Omega_m/0.27)^{0.6} = 0.79 \pm 0.03$ |

$^{a}$ Consistent with Ωm = 0.3 (Λ or open), cluster normalized; Ωm = 1, σ8 = 1 excluded.

$^{b}$ Consistent with ΛCDM or OCDM, but not COBE normalized Ωm = 1.

$^{c}$ Reanalysis by Van Waerbeke et al. (2002) gives σ8 = 0.98 ± 0.06 (Ωm = 0.3, Γ = 0.2, 68%CL).

$^{d}$ Reanalysis by Heymans et al. (2004) to correct for intrinsic alignments gives $\sigma_8(\Omega_m/0.3)^{0.6} = 0.67 \pm 0.10$.

$^{e}$ Brown et al. (2005) used a subset of this data to show that the matter power spectrum increased with time.

$^{f}$ In the Chandra Deep-Field South; the authors warn that this field was selected to be empty, hence σ8 may be biased low.

$^{g}$ A combination of 4 previously published surveys.

$^{h}$ Both based on the same raw SDSS data, but with analyses and reduction pipelines by 2 different groups.

$^{i}$ Other parameters fixed to WMAP 7-year values.

5.3.2. Galaxy-galaxy lensing as a cosmological probe

Like cosmic shear, galaxy-galaxy lensing is an old idea. The earliest astrophysically interesting upper limit was that of Tyson et al. (1984), who used the images of 200,000 galaxies measured by the now-obsolete method of digitizing photographic plates to exclude extended isothermal halos with $v_c > 200$ km s$^{-1}$ around an apparent magnitude-limited sample of galaxies. Galaxy-galaxy lensing was observed at ~ 4σ by Brainerd et al. (1996), the first clear detection of cosmological weak lensing. Their analysis used a total of 3202 lens-source pairs in a field of area 0.025 deg$^2$. Several other detections followed this in deep surveys with limited sky coverage (Hudson et al. 1998, Smith et al. 2001, Hoekstra et al. 2003). However, the full scientific exploitation of the galaxy-galaxy lensing signal, in contrast to cosmic shear, favors wide-shallow surveys over deep-narrow surveys, since the S/N in the shape-noise limited regime scales as only $\bar{n}_{\rm source}^{1/2}$ rather than $\bar{n}_{\rm source}$. Therefore, in the decade of the 2000s the leading galaxy-galaxy lensing surveys became the 92 deg$^2$ Red-Sequence Cluster Survey (RCS; Hoekstra et al. 2004, Hoekstra et al. 2005, Kleinheinrich et al. 2006) and eventually the $10^4$ deg$^2$ SDSS (references below). The availability of spectroscopic redshifts in the latter allowed the signal from low-redshift galaxies to be stacked in physical rather than angular coordinates, enabling the detection of features as a function of transverse separation. The spectroscopic survey also provided detailed environmental information, measures of star-formation history, and full 3-dimensional clustering data (e.g., correlation lengths and redshift-space distortions) for the lens galaxies.

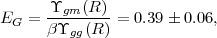

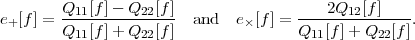

The SDSS remains the premier galaxy-galaxy lensing survey today, for both galaxy evolution and cosmology applications, and it likely will remain so until DES and HSC results become available. The SDSS Early Data Release, comprising only a few percent of the overall survey, already detected the galaxy-galaxy lensing signal with high significance (Fischer et al. 2000, McKay et al. 2001). Some of the major results of cosmological importance from the SDSS galaxy-galaxy lensing program have been:

|

(89) |

where Υ is a filtered correlation function (averaged over scales R = 10 - 50 $h^{-1}$ Mpc) and β is the redshift-space distortion parameter of equation (43). The combination Eg is equal to Ωm / f(z) at the redshift of the lenses, for which GR predicts $\Omega_m^{0.45}(z = 0.32)$ = 0.408 ± 0.029. This measurement establishes that the peculiar velocities of galaxies are, to ~ 15% precision, in agreement with expectations based on the potential structure traced by lensing.

All of these measurements will become possible with much smaller error bars once the Stage III WL experiments are operational. We look forward in particular to much smaller error bars on b / r and Eg derived from the largest scales, as well as improvements on c(M).

5.3.3. Lensing outside the optical bands

All wavelengths of light are gravitationally lensed. The optical 44 is not special in this regard — rather, the emphasis on optical wavelengths has been technological, as this is the cheapest band in which to observe and resolve large numbers of galaxies at cosmological distances and obtain some redshift information. However, advances in technology in other wavebands have resulted in weak lensing being detected at several other wavelengths:

5.4. Observational Considerations and Survey Design

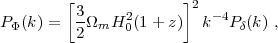

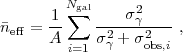

The forecasting of statistical errors on the cosmological parameters is much more involved for WL than for supernovae or BAO because of the complex dependence of the observables on the underlying model. Nevertheless, some intuition can be gained by making approximations to enable exact evaluation of the integrals. Specifically, we assume (i) a single source redshift zs; (ii) a power-law matter power spectrum,

|

(90) |

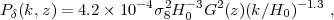

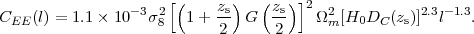

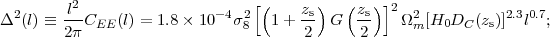

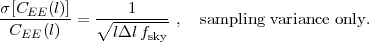

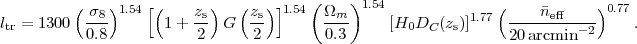

where the slope $k^{-1.3}$ is chosen to match that of the ΛCDM power spectrum at a scale of ~ 10 Mpc and the normalization is chosen to give the correct σ8; (iii) evaluation of the normalization (1 + z)G(z) not at the true lens redshift zl (over which we integrate from 0 to zs) but at a "typical" lens redshift zs / 2; and (iv) a flat universe. Then equation (73) gives

|

(91) |

The variance per logarithmic range in l is

|

(92) |

this is a measure of the shear variance at a particular angular scale $\theta \sim l^{-1}$. Recall that (1 + z)G(z) varies from ≈ 0.75 at z = 0 to one at high redshift (see Fig. 3). Since H0 enters only in the combination H0 Dc(z), and $D_c(z) \propto H_0^{-1}$, we see again that the WL signal depends on relative rather than absolute distances.

In practice, equation (92) is only a rough guide because of deviations of Pδ(k) from a power law and the nonlinear enhancement of the matter power spectrum on small scales. Nevertheless, we can see several important features:

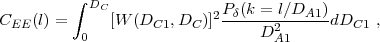

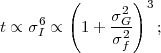

The statistical uncertainty on the shear power spectrum is determined by two factors: sampling variance at low l and shape noise at high l. Sampling variance uncertainty is associated with the fact that there are only a finite number N of Fourier modes in the survey area, and consequently the fractional uncertainty in the power can be no smaller than $\sqrt{2/N}$ (where the 2 arises because power is the variance of γl, not the rms amplitude). If we measure the power spectrum in a bin of width Δl, then the number of modes is $N = 2 l\,\Delta l\,f_{\rm sky}$, where fsky is the fraction of the sky observed. This corresponds to a sampling variance uncertainty

$$\frac{\sigma[C^{EE}(l)]}{C^{EE}(l)} = \sqrt{\frac{2}{N}} = \frac{1}{\sqrt{l\,\Delta l\,f_{\rm sky}}}. \qquad (93)$$

If we measure modes up to some lmax, there are $l_{\max}^2 f_{\rm sky}$ modes, and the sampling variance uncertainty in the normalization of the power spectrum is $\sqrt{2}\,f_{\rm sky}^{-1/2}\,l_{\max}^{-1}$.
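The mode counting in this paragraph is simple enough to write down directly. The following is a minimal sketch that uses only the relations stated in the text ($N = 2 l\,\Delta l\,f_{\rm sky}$ per bin and $l_{\max}^2 f_{\rm sky}$ modes in total); the function names are illustrative.

```python
import math

def n_modes(l, delta_l, f_sky):
    """Number of Fourier modes in a bin of width delta_l centered at l."""
    return 2.0 * l * delta_l * f_sky

def sampling_variance_fraction(l, delta_l, f_sky):
    """Fractional sampling-variance uncertainty sqrt(2/N) on the band power."""
    return math.sqrt(2.0 / n_modes(l, delta_l, f_sky))

def normalization_error(l_max, f_sky):
    """Sampling-variance error on the overall normalization using all
    l_max^2 * f_sky modes up to l_max."""
    return math.sqrt(2.0 / (l_max ** 2 * f_sky))

# Illustrative numbers: a full-sky band at l = 100 with delta_l = 10,
# and the normalization error for l_max = 1000 over 10% of the sky.
band_error = sampling_variance_fraction(100.0, 10.0, 1.0)
norm_error = normalization_error(1000.0, 0.1)
```

For a fixed bin the error falls as $f_{\rm sky}^{-1/2}$, so surveying ten times more area improves the sampling-variance limit by about a factor of three.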

At high l, the errors on the WL power spectrum become dominated not by the number of modes available but by how well each mode can be measured with a finite number of galaxies. Individual galaxies are not round, and so a shear estimator applied to a galaxy has an intrinsic scatter $\sigma_\gamma \sim 0.2$ rms in each component of shear ($\gamma_+$ or $\gamma_\times$), for typical galaxy populations with rms ellipticity $e_{\rm rms} \sim 0.4$ per component. This phenomenon is known as shape noise. Since it is uncorrelated between distinct galaxies (at least as a first approximation), shape noise produces a white noise (l-independent) power spectrum 45,

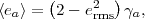

|

(94) |

where  eff

is the effective number of galaxies per

steradian

(this is the true number of galaxies with a penalty applied for objects

where the observational measurement error on the shear becomes comparable

to σγ; see below). Since the cosmic shear

CEE(l) is

decreasing with l, there is a transition scale

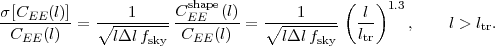

ltr where the shape

noise becomes comparable to the lensing signal.

Using equation (92), we estimate

eff

is the effective number of galaxies per

steradian

(this is the true number of galaxies with a penalty applied for objects

where the observational measurement error on the shear becomes comparable

to σγ; see below). Since the cosmic shear

CEE(l) is

decreasing with l, there is a transition scale

ltr where the shape

noise becomes comparable to the lensing signal.

Using equation (92), we estimate

|

(95) |

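At angular scales smaller than $\theta \sim l_{\rm tr}^{-1}$, lensing cannot detect (at S/N > 1) a typical fluctuation in the density field. 46 Statistical measurements are still possible, however, and the power spectrum can be measured to an accuracy of $\sqrt{2/N}\,C^{EE}_{\rm shape}(l)$, where N is the number of modes. Thus, in the shape-noise limited regime,

$$\frac{\sigma[C^{EE}(l)]}{C^{EE}(l)} = \sqrt{\frac{2}{N}}\,\frac{C^{EE}_{\rm shape}(l)}{C^{EE}(l)} = \frac{1}{\sqrt{l\,\Delta l\,f_{\rm sky}}}\,\frac{\sigma_\gamma^2}{\bar{n}_{\rm eff}\,C^{EE}(l)}. \qquad (96)$$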

One can see from this equation that the fractional uncertainty on CEE(l) in bins of width Δl / l ~ 1 increases with l for l > ltr. Therefore we arrive at the important conclusion that the power spectrum is best measured at the transition scale ltr: on larger scales sampling variance degrades the measurement even though individual structures are seen at high signal-to-noise ratio (SNR), and on smaller scales shape noise dominates. The aggregate uncertainty in the normalization of the power spectrum is thus of order

$$\sigma[\ln C^{EE}] \sim f_{\rm sky}^{-1/2}\,l_{\rm tr}^{-1}. \qquad (97)$$

A full-sky experiment 47 reaching tens of galaxies per arcmin$^2$ at redshifts of order unity would have $l_{\rm tr} \sim 1000$ and so could measure the normalization of the power spectrum to a statistical precision of order 0.1%. This would be an unprecedented measurement of the strength of matter clustering. However, as we will see below, there are substantial statistical and systematic hurdles to such an experiment.
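The trade-off between the two noise regimes can be illustrated with a small numerical sketch. Several ingredients here are assumptions rather than results from the text: the two limits are interpolated with the standard Gaussian-field expression $\sqrt{2/N}\,[1 + C^{EE}_{\rm shape}/C^{EE}]$, the signal is a toy power law $C^{EE}(l) \propto l^{-1.3}$ normalized so that it crosses the shape noise at a chosen $l_{\rm tr}$, and all function names are hypothetical.

```python
import math

# Square arcminutes per steradian, to convert a source density per arcmin^2.
ARCMIN2_PER_SR = (180.0 * 60.0 / math.pi) ** 2

def shape_noise_power(sigma_gamma, n_eff_arcmin2):
    """White shape-noise power sigma_gamma^2 / n_eff, with n_eff per steradian."""
    return sigma_gamma ** 2 / (n_eff_arcmin2 * ARCMIN2_PER_SR)

def toy_signal_power(l, l_tr, c_shape, slope=1.3):
    """Toy power-law signal (assumption) equal to the shape noise at l = l_tr."""
    return c_shape * (l / l_tr) ** (-slope)

def fractional_error(l, f_sky, c_signal, c_shape, dlnl=1.0):
    """Gaussian-field error on a band of logarithmic width dlnl:
    sqrt(2/N) * (1 + noise/signal), with N = 2 * l * delta_l * f_sky."""
    n_modes = 2.0 * l * (dlnl * l) * f_sky
    return math.sqrt(2.0 / n_modes) * (1.0 + c_shape / c_signal)

def best_multipole(l_tr, f_sky, c_shape):
    """Multipole on a logarithmic grid where the band error is smallest."""
    grid = [l_tr * 10 ** (k / 20.0) for k in range(-40, 41)]
    return min(
        grid,
        key=lambda l: fractional_error(
            l, f_sky, toy_signal_power(l, l_tr, c_shape), c_shape
        ),
    )

# Illustrative survey: sigma_gamma = 0.2, 30 galaxies per arcmin^2, full sky,
# and a transition scale of l_tr = 1000 imposed by hand.
c_shape = shape_noise_power(0.2, 30.0)
l_tr = 1000.0
l_best = best_multipole(l_tr, 1.0, c_shape)
err_at_tr = fractional_error(l_tr, 1.0, toy_signal_power(l_tr, l_tr, c_shape), c_shape)
```

In this toy model the best-measured band lies within a factor of a few of $l_{\rm tr}$, and the band error there is of order $f_{\rm sky}^{-1/2}\,l_{\rm tr}^{-1}$, in line with the order-of-magnitude argument above.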

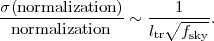

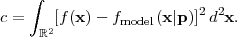

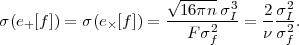

Finally, we consider galaxies measured at finite SNR. In the above analysis, we assumed that each galaxy provided an estimate of the shear with uncertainty σγ. At finite SNR there is also measurement noise σobs, so that each galaxy provides an estimate with error $(\sigma_\gamma^2 + \sigma_{\rm obs}^2)^{1/2}$. Using inverse-variance weighting, in the finite-SNR case the shape noise becomes equation (94), with the effective source density

$$\bar{n}_{\rm eff} = \frac{1}{A}\sum_i \frac{\sigma_\gamma^2}{\sigma_\gamma^2 + \sigma_{{\rm obs},i}^2}, \qquad (98)$$

where A is the survey area and the sum is over the galaxies. This is always less than $\bar{n} = N_{\rm gal}/A$. The effective source density $\bar{n}_{\rm eff}$ is limited in part by the depth of the survey: $\sigma_{{\rm obs},i}$ typically scales with integration time as $\propto t^{-1/2}$, but once $\sigma_{{\rm obs},i} \ll \sigma_\gamma$ one no longer continues to gain. How long does this take? In Section 5.5.3, we will show that for nearly circular, Gaussian galaxies 48

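$$\sigma_{\rm obs} \approx \frac{1}{\nu}\left[1 + \left(\frac{r_{\rm psf}}{r_{\rm gal}}\right)^2\right], \qquad (99)$$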

where rpsf and rgal are the half-light radii of the PSF and the galaxy, respectively, and ν is the detection significance (in σs). Thus for galaxies with a similar size as the PSF, we expect to reach σobs = 0.1 (measurement noise half of shape noise) after integrating long enough to see the galaxy at 20σ.

In principle, the summation in equation (98) is over all objects detected as extended sources, and any galaxy could be used if its detection significance is high enough. In practice, this is dangerous: while one might hope to obtain σobs = 0.1 on a galaxy with rgal = 0.5rpsf and a 50σ detection, the "ellipticity measurement" on this galaxy consists of measuring the small deviation of the image from the PSF. Such a procedure tends to magnify systematic errors in the PSF model and is usually unadvisable. Therefore, most WL surveys impose a cutoff on rgal / rpsf or some similar property.
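The effective source density and the size cut can be sketched in a few lines. This is an illustration under stated assumptions, not a survey pipeline: the measurement noise uses the scaling $\sigma_{\rm obs} \approx [1 + (r_{\rm psf}/r_{\rm gal})^2]/\nu$, which reproduces both numerical examples quoted above; the inverse-variance weight is $\sigma_\gamma^2/(\sigma_\gamma^2 + \sigma_{\rm obs}^2)$; and the function names, the default cut value, and the toy catalog are hypothetical.

```python
def sigma_obs(nu, r_gal, r_psf):
    """Shear measurement noise for a galaxy detected at significance nu
    (assumed scaling for nearly circular Gaussian galaxies)."""
    return (1.0 + (r_psf / r_gal) ** 2) / nu

def effective_density(galaxies, area, r_psf, sigma_gamma=0.2, min_size_ratio=0.0):
    """Effective source density: sum of inverse-variance weights per unit area.

    galaxies is a list of (nu, r_gal) pairs; objects with
    r_gal / r_psf below min_size_ratio are excluded, mimicking a size cut.
    """
    total = 0.0
    for nu, r_gal in galaxies:
        if r_gal / r_psf < min_size_ratio:
            continue
        s_obs = sigma_obs(nu, r_gal, r_psf)
        total += sigma_gamma ** 2 / (sigma_gamma ** 2 + s_obs ** 2)
    return total / area

# Toy catalog (hypothetical): 100 galaxies the size of the PSF at 20 sigma,
# plus 100 poorly resolved galaxies (r_gal = 0.5 r_psf) at 50 sigma.
catalog = [(20.0, 1.0)] * 100 + [(50.0, 0.5)] * 100
n_eff_all = effective_density(catalog, area=1.0, r_psf=1.0)
n_eff_cut = effective_density(catalog, area=1.0, r_psf=1.0, min_size_ratio=0.8)
```

A galaxy with σobs = 0.1 and σγ = 0.2 carries a weight of 0.8, so both populations contribute equally to the statistical budget here; the size cut removes the poorly resolved half for the sake of systematics control rather than statistics.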

5.4.2. The Galaxy Population for Optical Surveys

The design of a WL survey must begin by considering the population of galaxies. We will focus here on the population in the 3-dimensional space of redshift z, effective radius reff, and apparent AB magnitude in the I-band (a convenient choice for shape measurement with red-sensitive CCDs from the ground). The plots shown here are based on the mock catalog of Jouvel et al. (2009), which uses real galaxies from the COSMOS survey but fills in missing information for individual galaxies (e.g. redshifts or line fluxes) with photo-zs and models.

Figure 17 shows the mean surface density of galaxies and the median source redshift as a function of limiting magnitude $I_{AB}$ for effective radius cuts of 0.15", 0.248", and 0.35". In general, one would like to use galaxies larger than the PSF to avoid amplification of systematics when applying a PSF correction to the shapes. The "effective radius" (EE50, for 50% encircled energy) of a typical ground-based PSF is ~ 0.35" under good conditions, corresponding to a FWHM of ~ 0.7". The 0.248" cut is a factor of √2 smaller, appropriate if one can make use of galaxies smaller than the PSF or has sufficient étendue to do the entire survey under the very best seeing conditions. Measuring galaxies at reff = 0.15" is well beyond present ground-based cosmic shear survey capabilities, for both algorithmic and PSF-determination reasons, and will likely require a space (or balloon) based platform.

5.4.3. Photometric Redshifts and their Calibration

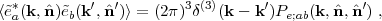

Modern WL analyses all use photometric redshifts in some way. They are central to tomography and cosmography measurements, and they are also needed in most schemes to remove the intrinsic alignment contamination. In the case of GGL, photo-zs are used to select sources that are actually behind the lens plane (sources in front of the lens are unlensed and dilute the signal, whereas sources at the same redshift as the lens can contribute intrinsic alignments).

One can characterize the photo-z distribution using the joint probability distribution for the photo-z zp and the true redshift z for some sample of galaxies, P(zp, z). In the case of lensing, we care about the conditional probability distribution, P(z|zp). This distribution is sometimes characterized by its conditional bias and scatter,

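$$\delta z(z_p) \equiv \langle z\rangle|_{z_p} - z_p, \qquad \sigma_z(z_p) \equiv \left[\langle z^2\rangle|_{z_p} - \langle z\rangle^2|_{z_p}\right]^{1/2}, \qquad (100)$$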

but it is always non-Gaussian and in practice there are "outliers" or "catastrophic failures" with $|z - z_p| \sim \mathcal{O}(1)$. The conditional probability distribution is not symmetric: Bayes's theorem tells us that

$$P(z|z_p) = \frac{P(z_p|z)\,P(z)}{\int P(z_p|z')\,P(z')\,dz'}, \qquad (101)$$

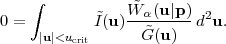

so a photo-z that is "unbiased" in the conventional sense of $\langle z_p\rangle|_z = z$ may still have δz(zp) ≠ 0. It is not required that photometric redshifts have δz(zp) = 0, but one does need to know the value of δz(zp) to relate observations to model parameters. From the simplified example discussed in Section 5.4.1, we can see that a systematic error of ~ 0.01 in δz(zp) / zp will lead to a normalization error in the matter power spectrum of the order of 2%. Similarly, if 1% of galaxies in a source redshift bin zs are actually outliers with redshift z ≪ zs, they will dilute the expected lensing signal by 1%, and the power spectrum by 2%.
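Both points in this paragraph can be illustrated numerically. The sketch below rests on assumptions that are not from the text: a Gaussian $P(z_p|z)$ centered on the true redshift with constant scatter (so that the photo-z is unbiased at fixed true redshift), a hypothetical redshift distribution $P(z) \propto z^2 \exp[-(z/z_0)^{1.5}]$, and illustrative function names. It applies Bayes's theorem on a grid to obtain the conditional bias at fixed photo-z, and separately evaluates the dilution caused by a fraction of unlensed outliers.

```python
import math

def conditional_bias(z_p, sigma=0.1, z0=0.5, dz=0.001, z_max=4.0):
    """delta_z(z_p) = <z>|z_p - z_p, from P(z|z_p) proportional to P(z_p|z) P(z)."""
    weighted_sum = 0.0
    norm = 0.0
    n_grid = int(z_max / dz)
    for i in range(1, n_grid + 1):
        z = i * dz
        prior = z ** 2 * math.exp(-((z / z0) ** 1.5))        # hypothetical P(z)
        likelihood = math.exp(-0.5 * ((z_p - z) / sigma) ** 2)  # P(z_p|z), mean z
        weight = prior * likelihood
        weighted_sum += weight * z
        norm += weight
    return weighted_sum / norm - z_p

def dilution_factors(outlier_fraction):
    """Multiplicative suppression of the shear and of the shear power when a
    fraction of the sources sits at z << z_s and carries no lensing signal."""
    shear_factor = 1.0 - outlier_fraction
    return shear_factor, shear_factor ** 2

# Below the peak of P(z) the true redshifts scatter in from above, and
# above the peak they scatter in from below.
bias_low = conditional_bias(0.3)
bias_high = conditional_bias(1.5)

shear_factor, power_factor = dilution_factors(0.01)
```

The sign of the conditional bias follows the slope of the underlying redshift distribution, which is why δz(zp) must be calibrated for the actual source population rather than inferred from the scatter at fixed true redshift.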

If the full distribution P(z|zp) is known, then the shear cross-power spectra for any pair of redshift slices can be determined for a given cosmological model. However, the use of photo-zs to suppress intrinsic alignments (Section 5.6.1) does not work if the intrinsic alignments of the outliers are significant, or even if the scatter is large enough that galaxies can evolve significantly within a redshift bin, so there is a strong motivation to reduce them to the minimum level possible. Thus lensing programs must face two challenging problems: (i) obtaining a low outlier rate, and (ii) determining P(z|zp) to sub-percent precision.

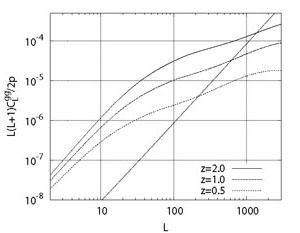

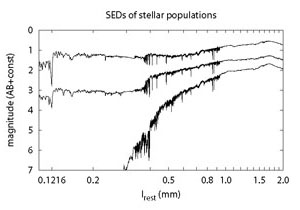

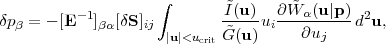

To understand how to reduce the outlier rate, we must investigate how photo-zs work: they take several broad-band fluxes from a galaxy and try to identify spectral features (see Fig. 18). At low redshifts, the strongest feature in the optical part of a galaxy spectrum is the break around 3800-4000 Å, arising from metal line absorption in early-type galaxies and the Balmer continuum (plus high-order lines) in late-type galaxies. As the redshift of the galaxy increases, this feature moves to the red, and above redshifts of z ~ 1.3 it is no longer useful for optical photo-zs (depending on the SNR in z and y bands). At z ≥ 2, the Lyα break redshifts into the optical bands and can be used, but it is possible to confuse it with the Balmer/4000 Å break. This is the principal example of a photo-z degeneracy.

Figure 18. The SEDs of three stellar populations are shown: a single burst at age 25 Myr (top); a continuous star-forming population of 6 Gyr age (middle); and a single burst at 11 Gyr (bottom). All have solar metallicity. Blueward of Lyα they have been adjusted for an IGM transmission factor of 0.8 (appropriate for z = 2.25; see McDonald et al. 2006), but other corrections (dust, nebular emission) are not included. The models are obtained from Bruzual and Charlot (2003). Note the break at ~ 0.37-0.40 μm present in all models, albeit with varying shape, strength, and precise location.

The above discussion suggests that to reduce outliers across the whole range of redshifts used for WL surveys (z = 0 to ~ 3) one desires coverage from blueward of the Balmer/4000 Å feature (i.e. a u-band) through the near-IR (J + H bands), so that either the Balmer/4000 Å feature or Lyα is robustly identifiable. The optical bands can be easily observed from the ground. As one moves redward, however, the sky brightness as observed from the ground increases rapidly, and obtaining the J + H band photometry matched to the depth of future surveys is only practical from space.

One is then left with the problem of measuring the photo-z error distribution. The most direct and conceptually simplest way to do this is to collect spectroscopic redshifts of a representative subsample of the sources used for WL. This is, however, very expensive in terms of telescope time: many galaxies have weak or absent emission lines (particularly if one restricts to the optical range), and so one searches for absorption features of faint (i ~ 22-25) galaxies. Stage III/IV experiments may require O(10^5) redshifts to calibrate photo-zs at the level of their statistical errors, and we desire sub-percent failure rates because the failures are likely concentrated at specific redshifts. These failure rates are far below those that have actually been achieved by spectroscopic surveys at the desired magnitudes.

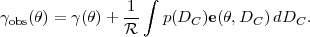

An alternative idea (Newman 2008) is to use the 2-D angular cross-correlation of the photo-z galaxies with a large area spectroscopic redshift survey, which can target brighter galaxies and/or the subset of faint galaxies that have strong emission lines. For a bin of galaxies with photo-z centered on zp, the amplitude of cross-correlation is proportional to bspec(z)bphot(z, zp)P(z|zp), where bspec and bphot are the clustering bias factors of the spectroscopic and photo-z galaxies, respectively, at redshift z. The auto-correlations of the spectroscopic and photo-z samples provide additional constraints on the bias factors, and one also has the normalization condition ∫P(z|zp) dz = 1 for each zp bin. The key uncertainty in this approach is constraining the full redshift-dependent bphot(z, zp); if the bias varies with photo-z error (e.g., because high-bias red galaxies and low-bias blue galaxies have different photo-z error distributions) then this dependence must be modeled to extract P(z|zp) (Matthews and Newman 2010). If one is using intermediate or small scale clustering, then one must also allow for scale-dependent bias and for cross-correlation coefficients lower than unity between different galaxy populations, which would lower the amplitude of cross-correlations relative to auto-correlations. Finally, the approach requires a spectroscopic sample that spans the full redshift range of the photometric sample; a quasar redshift survey may provide sufficient sampling density for probing the high-redshift tail of P(z|zp). The cross-correlation technique has to date not been used for WL surveys, but it has been used to measure other redshift distributions — see, e.g., the application to radio galaxies by Ho et al. (2008). 
Adding galaxy-galaxy lensing measurements to the galaxy clustering measurements may improve the robustness and accuracy of the cross-correlation approach and allow some degree of "self-calibration" without relying on an external spectroscopic data set (Zhang et al. 2010).
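The core of the cross-correlation idea can be sketched in a few lines for the most idealized case. The sketch assumes that the cross-correlation amplitude in each spectroscopic redshift slice is exactly A bspec(z) bphot(z, zp) P(z|zp) with an unknown constant A, that both bias factors are already known, and that noise is absent; the function name and the toy bias models are hypothetical.

```python
import numpy as np

def pz_from_cross_correlation(w_cross, b_spec, b_phot, z):
    """Estimate P(z|zp) on the grid z from cross-correlation amplitudes.

    Assumes w_cross(z) = A * b_spec(z) * b_phot(z) * P(z|zp) with an unknown
    constant A, and that both bias factors are known on the same grid.
    """
    unnormalized = w_cross / (b_spec * b_phot)
    # Trapezoidal rule enforces the normalization integral of P(z|zp) dz = 1.
    norm = np.sum(0.5 * (unnormalized[1:] + unnormalized[:-1]) * np.diff(z))
    return unnormalized / norm

# Toy inputs (all assumed for illustration).
z = np.linspace(0.0, 2.0, 201)
p_true = np.exp(-0.5 * ((z - 0.8) / 0.1) ** 2)
p_true /= np.sum(0.5 * (p_true[1:] + p_true[:-1]) * np.diff(z))
b_spec = 1.0 + 0.5 * z
b_phot = 1.2 + 0.3 * z
amplitude = 0.37                       # unknown overall constant A
w_cross = amplitude * b_spec * b_phot * p_true

p_est = pz_from_cross_correlation(w_cross, b_spec, b_phot, z)
```

The normalization condition removes the unknown constant, but any error in the assumed redshift dependence of the bias factors propagates directly into the recovered distribution, which is why constraining bphot(z, zp) is the key uncertainty.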

Overall, the problem of measuring P(z|zp) to the required accuracy remains one of the greatest challenges for future WL projects. Given the difficulty of assembling an ideal spectroscopic calibration sample, the treatment of photo-z distributions in Stage III and Stage IV WL analyses is likely to involve some combination of direct calibration, cross-correlation calibration, empirically motivated models of galaxy SEDs, and marginalization over remaining uncertainties in parameterized forms of P(z|zp). Tomographic WL measurements themselves have some power to constrain these distributions (at the cost of some leverage on cosmological parameters), and weak lensing by clusters that have well determined individual redshifts may also be a valuable tool.

An interesting alternative to shape measurement in the optical is to work in the radio part of the spectrum, where late-type galaxies are observable via their synchrotron emission. In order to achieve the required resolution, one needs to use a large interferometer: a fringe spacing of 1" is achievable at 1 GHz with a baseline of 60 km. One also needs a large collecting area to obtain high-SNR images on a competitive number of galaxies; the SKA could in principle measure billions of galaxies (Blake et al. 2004). But let us suppose such an interferometer were built. What would it do for WL? In principle, it could solve many problems at once:

It is also possible to do lensing analyses on the CMB. Here there are several advantages: the source redshift is known exactly from cosmological parameters, zsrc = 1100; theory predicts exactly the statistical distribution of hot and cold spots on the CMB, so there is no intrinsic alignment effect; and the PSF (or "beam" shape) of microwave experiments tends to be far more stable than in the optical. The CMB is a diffuse field rather than a collection of objects (galaxies), so reconstructing the shear requires a different mathematical formalism than for galaxy lensing. The basis for this formalism is two-fold:

Until recently, because of SNR issues, lensing of the CMB had been detected only in cross-correlation with foreground galaxies (Smith et al. 2007, Hirata et al. 2008, Bleem et al. 2012). The advent of the arcminute-scale CMB experiments ACT and SPT (primarily motivated by cluster cosmology using the SZ effect) has enabled robust detections of the power spectrum of the CMB lensing field (Das et al. 2011, van Engelen et al. 2012).

Because CMB lensing only provides a single source slice, it is unlikely to ever replace galaxy lensing. However, in combination with galaxy lensing, it can provide the most distant source slice for tomography (Hu 2002b) and cosmography (Acquaviva et al. 2008).

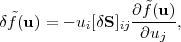

So far we have treated shear measurement as a black box: it takes in an image of the galaxy and some knowledge of the instrument, and it returns γ+,×, an unbiased estimator for the true shear γ with some uncertainty per component σγ. This black box is very complicated on the inside, as one needs an accurate and robust shape measurement algorithm, and even providing the necessary inputs to such an algorithm, particularly an accurate determination of the PSF, has proven to be difficult. After a brief overview of these algorithms, we describe the idealized problem of measuring shear from an ensemble of galaxy images, then turn to a more detailed discussion of the challenges that arise in practice.

There are two general strategies for shape measurement methods in common use today. One class of methods is to measure moments of galaxies (in real or Fourier space), and relate, e.g., the mean quadrupole moment of galaxies to the shear. These methods started with ad hoc "PSF correction" prescriptions, but they have recently evolved toward methods that attempt to statistically close the hierarchy of moments of galaxies and PSFs in a model-independent way. The other class of methods is based on forward modeling: one adopts a model for a galaxy (e.g., an elliptical Sérsic profile, or a linear combination of basis images), simulates the observational procedure, and minimizes χ². Both approaches have their advantages and disadvantages. Much of the early WL work used moments-based methods, but for years a generally applicable PSF correction scheme seemed out of reach. Some of the more recent incarnations of the Fourier domain moments-based methods work for arbitrary distributions of galaxy and PSF profiles; however these are less mature in their practical implementation, and they impose stringent requirements on input data quality (e.g., sampling). The forward modeling methods can handle a much wider range of observational defects (e.g., under some circumstances one may even be able to measure a galaxy containing missing pixels), but they depend on a model for the galaxy being observed; one must carefully assess the impact of an insufficiently general model. Both strategies require exquisite knowledge of the PSF.
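To make the forward-modeling strategy concrete, the following is a deliberately minimal sketch rather than any published algorithm. It assumes an elliptical Gaussian galaxy, a round Gaussian PSF (so the convolution is analytic), known sizes, a single free ellipticity parameter e1, and a brute-force grid search in place of a real minimizer. All names and numerical values are hypothetical.

```python
import numpy as np

def gaussian_model(e1, sigma_gal, sigma_psf, size=33):
    """Elliptical Gaussian galaxy convolved analytically with a round Gaussian PSF."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Variances add under convolution of Gaussians.
    var_x = sigma_gal**2 * (1.0 + e1) + sigma_psf**2
    var_y = sigma_gal**2 * (1.0 - e1) + sigma_psf**2
    img = np.exp(-0.5 * (x**2 / var_x + y**2 / var_y))
    return img / img.sum()

def fit_e1(data, sigma_gal, sigma_psf, noise_sigma, grid):
    """Return the grid value of e1 that minimizes chi^2, and the chi^2 curve."""
    chi2 = np.array([
        np.sum((data - gaussian_model(e1, sigma_gal, sigma_psf, data.shape[0]))**2)
        / noise_sigma**2
        for e1 in grid
    ])
    return grid[np.argmin(chi2)], chi2

# Simulated observation with assumed parameters and white pixel noise.
rng = np.random.default_rng(2)
e1_true, sigma_gal, sigma_psf, noise_sigma = 0.2, 2.5, 1.5, 1e-4
data = gaussian_model(e1_true, sigma_gal, sigma_psf)
data = data + noise_sigma * rng.standard_normal(data.shape)

grid = np.linspace(-0.5, 0.5, 201)
e1_best, chi2 = fit_e1(data, sigma_gal, sigma_psf, noise_sigma, grid)
print(f"best-fit e1 = {e1_best:.3f}")
```

Because the data here are generated from the same model family that is fitted, this example cannot exhibit the bias from an insufficiently general model; replacing the simulated galaxy with a different profile is the natural way to explore that issue.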

Currently there are many algorithms in use in each category. The prototype moments-based method was that of Kaiser, Squires, and Broadhurst (KSB; Kaiser et al. 1995; improved by Luppino and Kaiser 1997, Hoekstra et al. 1998). Many improvements of these methods have been made, e.g., in computing better conversion factors from shear to quadrupole moments (Semboloni et al. 2006b). Elliptical-weighted moments and the concept of shear-covariance were introduced by Bernstein and Jarvis (2002) and have been used extensively in SDSS (Hirata and Seljak 2003b). Further progress was made by moving to moments in Fourier space, where the PSF "correction" becomes trivial (one divides by the Fourier transform of the PSF, at least in the regions where it is nonzero). This has culminated in the development of a shape measurement method that is exact in the high-SNR limit (Bernstein 2010). We discuss this method and its development in Section 5.5.2. An early example of the model-fitting approach was im2shape (Bridle et al. 2002). More recently, Bayesian model fits have been introduced that are stable at lower SNR (Miller et al. 2007, Kitching et al. 2008); these are currently being applied to the CFHTLS. The "shapelet" basis (Refregier 2003, Refregier and Bacon 2003), derived from energy eigenstates of a 2D quantum harmonic oscillator, is useful in both types of methods. The coefficients in a shapelet decomposition are moments, but one may also fit a model galaxy parameterized by its shapelet coefficients.
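The statement that PSF correction becomes a division in Fourier space can be illustrated with a noise-free toy example. This is only a sketch of the division step, not the Bernstein (2010) method: it assumes well-sampled Gaussian profiles, a PSF centred on the postage stamp, periodic boundary conditions, and an arbitrary threshold `floor` below which the PSF transform is treated as zero.

```python
import numpy as np

def fourier_psf_correct(observed, psf, floor=1e-3):
    """Divide the image transform by the PSF transform where |PSF| > floor."""
    obs_ft = np.fft.fft2(observed)
    psf_ft = np.fft.fft2(np.fft.ifftshift(psf))   # PSF assumed centred on the stamp
    usable = np.abs(psf_ft) > floor
    corrected = np.zeros_like(obs_ft)
    corrected[usable] = obs_ft[usable] / psf_ft[usable]
    return corrected, usable

def round_gaussian(size, sigma):
    """Unit-flux round Gaussian centred on pixel (size // 2, size // 2)."""
    half = size // 2
    y, x = np.mgrid[-half:size - half, -half:size - half]
    img = np.exp(-0.5 * (x**2 + y**2) / sigma**2)
    return img / img.sum()

size = 64
galaxy = round_gaussian(size, 3.0)     # pre-seeing galaxy (assumed profile)
psf = round_gaussian(size, 1.5)        # assumed PSF
# Noise-free "observation": periodic convolution carried out with FFTs.
observed = np.real(
    np.fft.ifft2(np.fft.fft2(galaxy) * np.fft.fft2(np.fft.ifftshift(psf)))
)

galaxy_ft, usable = fourier_psf_correct(observed, psf)
print(f"fraction of Fourier modes retained: {usable.mean():.2f}")
```

With noise present, the modes where the PSF transform is small would have their noise strongly amplified by the division, so the choice of which modes to keep becomes part of the estimator design.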

The various shape measurement algorithms have been tested and compared in blind simulations, such as the Shear Testing Program (STEP1/STEP2; Heymans et al. 2006, Massey et al. 2007a), GREAT08 (Bridle et al. 2010), and GREAT10 (Kitching et al. 2010). In most of these cases, the objective is to minimize both the shear calibration error m (i.e. the error in the response to a given input shear) and the spurious shear c (i.e., the shear measured by the algorithm on an unlensed sample of galaxies). The STEP2 simulations used typical ground-based PSFs and complex galaxy morphologies and found that many of the measurement methods had shear calibration errors |m| of one-to-several percent, and spurious shear |c| ranging from several × 10⁻⁴ to several × 10⁻³. This level of performance should thus be considered typical of the more mature, heavily used shear measurement algorithms, although recent methods have done better. On the other hand, the algorithmic errors are only a portion of the error budget in a WL experiment; most importantly, the early simulation tests did not require participants to recover the spatial variability of the PSF. Such a test is currently ongoing as part of the GREAT10 challenge (Kitching et al. 2010). Early results from GREAT10 are now available, but their significance is still being digested.
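In simulation tests of this kind, m and c are commonly summarized by a straight-line fit of recovered shear against input shear. The sketch below assumes the linear parameterization γobs = (1 + m) γtrue + c for a single shear component; the input values of m and c, the noise level, and the number of simulated fields are invented for illustration.

```python
import numpy as np

def fit_shear_bias(gamma_true, gamma_obs):
    """Least-squares fit of gamma_obs = (1 + m) * gamma_true + c; returns (m, c)."""
    slope, intercept = np.polyfit(gamma_true, gamma_obs, deg=1)
    return slope - 1.0, intercept

rng = np.random.default_rng(1)
n_fields = 2000
gamma_true = rng.uniform(-0.05, 0.05, size=n_fields)   # input shears
m_in, c_in = 0.02, 1e-3                                 # assumed biases
noise = 1e-3 * rng.standard_normal(n_fields)            # per-field measurement noise
gamma_obs = (1.0 + m_in) * gamma_true + c_in + noise

m_fit, c_fit = fit_shear_bias(gamma_true, gamma_obs)
print(f"m = {m_fit:+.4f}, c = {c_fit:+.5f}")
```

The precision with which m can be measured scales inversely with the spread of input shears and with the square root of the number of fields, which is one reason these challenges require large simulated data volumes.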

In the remaining portions of this section we will discuss the mathematical problem of shape measurement (Section 5.5.1) and the basis for some of the commonly used methods (Section 5.5.2) and their statistical errors (Section 5.5.3). We cannot of course do justice to every method that has been suggested or used. We have chosen to highlight the recent progress in Fourier-space methods, since in principle they provide an exact solution in the limit of high SNR and are thus ripe for further development and utilization (Bernstein 2010). There are some biases that can result even for perfect shape measurement (or galaxies measured with a δ-function PSF), including the noise-related biases and selection biases, which are probably present at some level for all known algorithms; these are discussed in Section 5.5.4. Finally Section 5.5.5 describes the determination of the PSF, which is taken as an input for any shape measurement algorithm.

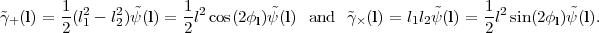

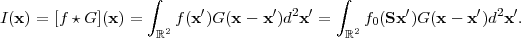

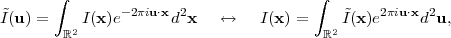

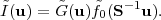

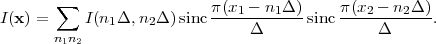

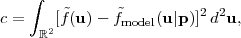

The idealized shape measurement problem is as follows: we have a galaxy in the source plane whose surface brightness is f0(x), where x is a 2-dimensional vector in the plane of the sky. It is first sheared, i.e., the galaxy in the image plane is f(x) = f0(Sx), where S is the shearing matrix,

$$S = \begin{pmatrix} 1-\gamma_+ & -\gamma_\times \\ -\gamma_\times & 1+\gamma_+ \end{pmatrix}. \tag{102}$$

(We assume |γ| ≪ 1 here and work to linear order in γ for simplicity, although higher-order corrections will be important for Stage IV surveys.) We do not observe the actual image on the sky, however; we observe it through an instrument with PSF G(x). The resulting image is

$$I(\mathbf{x}) = \int f(\mathbf{x}')\,G(\mathbf{x}-\mathbf{x}')\,d^2\mathbf{x}'. \tag{103}$$
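The two steps of the idealized problem, shearing and then PSF convolution, can be sketched numerically. The example assumes the linear-order shear matrix S = [[1 - γ+, -γ×], [-γ×, 1 + γ+]], a round Gaussian source, a round Gaussian PSF, and periodic FFT convolution on a centred postage stamp. The function names are hypothetical, and the unweighted quadrupole ellipticity is used only as a convenient diagnostic.

```python
import numpy as np

def stamp_coords(size):
    """Pixel coordinates (y, x) measured from the stamp centre."""
    half = size // 2
    return np.mgrid[-half:half + 1, -half:half + 1].astype(float)

def sheared_gaussian(size, sigma, g_plus, g_cross):
    """Image-plane f(x) = f0(S x) for a round Gaussian source f0."""
    y, x = stamp_coords(size)
    # Assumed linear-order shear matrix S = [[1 - g+, -gx], [-gx, 1 + g+]].
    xs = (1.0 - g_plus) * x - g_cross * y
    ys = -g_cross * x + (1.0 + g_plus) * y
    return np.exp(-0.5 * (xs**2 + ys**2) / sigma**2)

def convolve_fft(image, psf):
    """Periodic convolution with a PSF centred on the stamp."""
    psf_ft = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * psf_ft))

def e_plus(image):
    """Unweighted (Qxx - Qyy) / (Qxx + Qyy) about the stamp centre."""
    y, x = stamp_coords(image.shape[0])
    qxx = np.sum(image * x**2)
    qyy = np.sum(image * y**2)
    return (qxx - qyy) / (qxx + qyy)

size, gamma = 65, 0.05
galaxy = sheared_gaussian(size, sigma=3.0, g_plus=gamma, g_cross=0.0)
psf = sheared_gaussian(size, sigma=2.0, g_plus=0.0, g_cross=0.0)
psf /= psf.sum()
observed = convolve_fft(galaxy, psf)

e_before = e_plus(galaxy)      # ellipticity of the sheared, pre-seeing image
e_after = e_plus(observed)     # ellipticity after PSF convolution
print(f"e+ before PSF: {e_before:.4f}, after PSF: {e_after:.4f}")
```

For this Gaussian case the pre-seeing ellipticity is 2γ/(1 + γ²), and a round PSF adds equally to both second moments, so the observed ellipticity is diluted. Undoing that dilution without bias is the task of the PSF correction.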